Development Effectiveness Review of the World Health Organization

2007-2010

Final Report - December 2012

Table of Contents

- Acknowledgements

- Abbreviations, Acronyms and Symbols

- Executive Summary

- 1.0 Introduction

- 2.0 Methodology

- 3.0 Findings on the Development Effectiveness of WHO

- 3.1 WHO programs appear relevant to stakeholder needs and national priorities

- 3.2 The WHO appears to be effective in achieving its development objectives and expected results

- 3.3 Benefits of WHO programs appear to be sustainable but there are challenges in sustaining the capacity of partners

- 3.4 WHO evaluations did not address efficiency

- 3.5 WHO evaluations did not address gender equality and environmental sustainability

- 3.6 Evaluations report weaknesses in systems for monitoring and evaluation

- 4.0 WHO and Canada’s Priorities in International Development

- 5.0 Conclusions

- 6.0 Recommendations for CIDA

- Annex 1: Criteria Used to Assess Development Effectiveness

- Annex 2: Evaluation Sample

- Annex 3: Approach and Methodology

- Annex 4: Evaluation Quality—Scoring Guide and Results

- Annex 5: Guide for Review Team to Classify Evaluation Findings

- Annex 6: Corporate Documents Reviewed

- Annex 7: CIDA Funding to Multilateral Humanitarian and Development Organizations

- Annex 8: Management Response

Acknowledgements

CIDA’s Evaluation Division wishes to thank all who contributed to this review for their valued input, their constant and generous support, and their patience.

Our thanks go first to the independent team from the firm, Goss Gilroy Inc., made up of team leader Ted Freeman, and analysts Danielle Hoegy and Tasha Truant. We are also grateful for the support of the Department for International Development of the United Kingdom and the Swedish Agency for Development Evaluation, which provided analytical support during the reviews of the World Health Organization (WHO) and the Asian Development Bank.

The Evaluation Division would also like to thank the management team of CIDA’s Global Initiative Directorate (Multilateral and Global Programs Branch) at Headquarters in Gatineau for its valuable support.

Our thanks also go to the representatives of the WHO for their helpfulness and their useful, practical advice to the evaluators.

From CIDA’s Evaluation Division, we wish to thank Vivek Prakash, Evaluation Officer, for his assistance with the review. We also thank Michelle Guertin, CIDA Evaluation Manager, for guiding this review to completion and for her contribution to the report.

Caroline Leclerc

Director General

Strategic Planning, Performance and Evaluation Directorate

List of Abbreviations

- CAH: Child and Adolescent Health and Development

- CIDA: Canadian International Development Agency

- DAC-EVALNET: Network for Development Evaluation of the Development Assistance Committee

- EPI: Expanded Programme on Immunization

- HAC: Health Action in Crises

- MDG: Millennium Development Goals

- MO: Multilateral Organization

- MOPAN: Multilateral Organization Performance Assessment Network

- NGO: Non-Governmental Organization

- OECD: Organisation for Economic Co-operation and Development

- OIOS: Office of Internal Oversight Services

- RBM: Results-Based Management

- UN: United Nations

- WHO: World Health Organization

- USD: United States Dollars

- VPD: Vaccine-preventable diseases

Executive Summary

Background

This report presents the results of a development effectiveness review of the World Health Organization (WHO). Founded in 1948, the WHO is the directing and coordinating authority on international health within the United Nations System with the overall goal of achieving the highest level of health for all. It does not directly provide health services, but instead coordinates global health-related efforts and establishes global health norms. The WHO employs over 8,000 public health experts, including doctors, epidemiologists, scientists, managers, administrators and other professionals. These health experts work in 147 country offices, six regional offices and at the headquarters in Geneva.

While poverty reduction is not the primary focus of the WHO’s mandate, it does contribute to poverty reduction through its global leadership (for example, by establishing global health standards and norms that developing countries use, and by supporting humanitarian coordination) and through its technical assistance in developing countries.

Health Canada has the overall substantive lead for the Government of Canada’s engagement with the WHO, and is head of the Canadian delegation to the World Health Assembly. The Canadian International Development Agency (CIDA)’s main engagements with the WHO include policy dialogue and development assistance programming in infectious diseases, child health, and humanitarian assistance. More specifically, the WHO also plays a key role in developing health indicators and data collection in support of the G8 Initiative on Maternal, Newborn and Child Health championed by Canada.

With 284 million Canadian dollars of CIDA support in the four fiscal years from 2007–2008 to 2010–2011, the WHO ranks eighth among multilateral organizations supported by CIDA in dollar terms. In the area of health, only the Global Fund to Fight AIDS, Tuberculosis and Malaria (GFATM) ranks higher with 450 million Canadian dollars of support from CIDA in the same period.

Purpose

The review is intended to provide an independent, evidence-based assessment of the development effectiveness (hereafter referred to as effectiveness) of WHO programs. It satisfies evaluation requirements established by the Government of Canada’s Policy on Evaluation and provides CIDA’s Multilateral and Global Programs Branch with evidence on the development effectiveness of the WHO.

Approach and Methodology

The approach and methodology for this review were developed under the guidance of the Organisation for Economic Co-operation and Development (OECD)’s Development Assistance Committee (DAC) Network on Development Evaluation (DAC-EVALNET). Two pilot tests, on the WHO and the Asian Development Bank, were conducted in 2010 during the development phase of the common approach and methodology. The report relies, therefore, on the pilot test analysis of evaluation reports published by the WHO’s Office of Internal Oversight Services (OIOS), supplemented with a review of WHO and CIDA corporate documents, and consultation with the CIDA manager responsible for managing relations with the WHO.

The methodology does not rely on a particular definition of (development) effectiveness. The Management Group and the Task Team that were created by the DAC-EVALNET to develop the methodology had previously considered whether an explicit definition was needed. In the absence of an agreed-upon definition, the methodology focuses on some of the essential characteristics of developmentally effective multilateral organization programming, as described below:

- Relevance of interventions: Programming activities and outputs are relevant to the needs of the target group and its members;

- Achievement of Development Objectives and Expected Results: The programming contributes to the achievement of development objectives and expected results at the national and local level in developing countries (including positive impacts for target group members);

- Sustainability of Results/Benefits: The benefits experienced by target group members and the results achieved are sustainable in the future;

- Efficiency: The programming is delivered in a timely and cost-efficient manner;

- Crosscutting Themes (Environmental Sustainability and Gender Equality): The programming is inclusive in that it would support gender equality and would be environmentally sustainable (thereby not compromising the development prospects in the future); and

- Using Evaluation and Monitoring to Improve Effectiveness: The programming enables effective development by allowing participating and supporting organizations to learn from experience and uses performance management and accountability tools, such as evaluation and monitoring, to improve effectiveness over time.

Based on the above-mentioned characteristics, the review’s methodology uses a common set of assessment criteria derived from the DAC’s existing evaluation criteria (Annex 1). The overall approach and methodology (Footnote 1) were endorsed by the members of the DAC-EVALNET in June 2011 as an acceptable approach for assessing the development effectiveness of multilateral organizations.

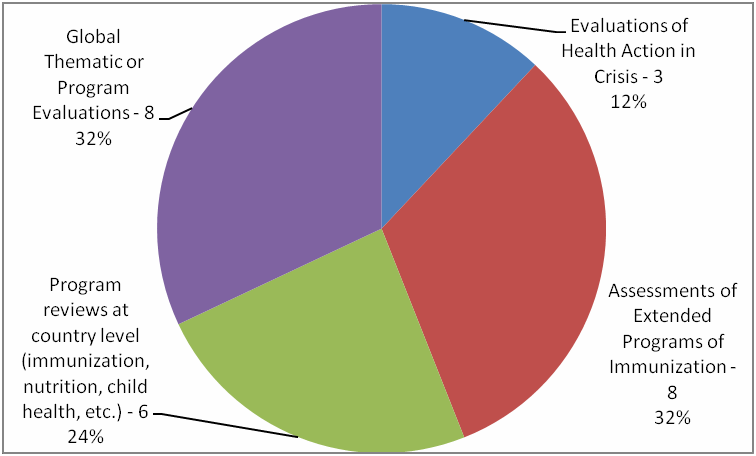

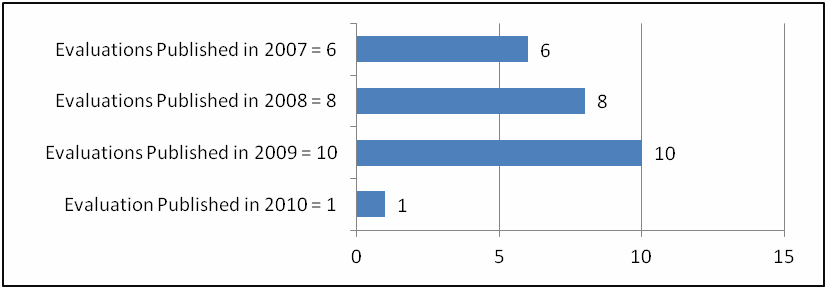

The review involved a structured meta-synthesis of a sample of 25 WHO evaluations completed between 2007 and 2010, at the country, regional and global/thematic level. The sampling process is described further in Annex 3. The limited number of available evaluation reports represents a limitation for this report, as discussed below.

After being screened for quality (Annex 4 describes the approach and criteria used), each evaluation was reviewed to identify findings relating to the six main criteria (and associated sub-criteria described in Annex 1) to assess effectiveness. The review team classified findings for each criterion using a four-point scale as “highly satisfactory,” “satisfactory,” “unsatisfactory” or “highly unsatisfactory.” Classification of findings was guided by a grid with specific instructions for each rating across all sub-criteria (Annex 5). The review team also identified factors contributing to or detracting from results.

Note that although no evaluations were screened out due to quality concerns, evaluations do not address all the criteria identified as essential elements for effective development. Therefore, this review examines the data available on each criterion before presenting results, and does not present results for some criteria.

The percentages shown in this report are based on the total number of evaluations that addressed the sub-criteria. However, coverage of the different sub-criteria in the evaluations reviewed varies from strong to weak. Cautionary notes are provided in the report when coverage warrants it.
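The denominator rule described above can be illustrated with a short sketch. It is not the review team's own tooling: the function name `share_satisfactory` and the sample data are assumptions made for illustration. Only the four-point scale and the 16-of-18 relevance figure reported later in this summary come from the report; the split between "highly satisfactory" and "satisfactory" is invented.

```python
from typing import Optional, Sequence

# Four-point scale used by the review team to classify findings.
POSITIVE = {"highly satisfactory", "satisfactory"}
SCALE = POSITIVE | {"unsatisfactory", "highly unsatisfactory"}


def share_satisfactory(ratings: Sequence[Optional[str]]) -> tuple[int, int, float]:
    """Return (positive count, evaluations addressing the sub-criterion, percent).

    None marks an evaluation that did not address the sub-criterion. Such
    evaluations are left out of the denominator, as the report describes.
    """
    addressed = [r for r in ratings if r is not None]
    for r in addressed:
        if r not in SCALE:
            raise ValueError(f"unknown rating: {r!r}")
    if not addressed:
        raise ValueError("no evaluation addressed this sub-criterion")
    positive = sum(r in POSITIVE for r in addressed)
    return positive, len(addressed), 100.0 * positive / len(addressed)


# Illustrative data only: a sample of 25 evaluations, 18 of which address the
# sub-criterion and 16 of which rate it positively. The split between the two
# positive ratings is hypothetical.
ratings = (
    ["highly satisfactory"] * 6
    + ["satisfactory"] * 10
    + ["unsatisfactory"] * 2
    + [None] * 7
)
positive, n, pct = share_satisfactory(ratings)
print(f"{pct:.0f}% ({positive} of {n} evaluations)")
```

Reporting the count alongside the percentage, as the report does, lets a reader see at a glance when a figure rests on only a handful of evaluations.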

In addition to the 25 evaluations, the review examined relevant WHO policy and reporting documents such as the reports of the Programme, Budget and Administration Committee to the Executive Board, Reports on WHO Reform by the Director-General, Evaluation Policy Documents, Annual Reports and the Interim Assessment of the Medium-Term Strategic Plan (see Annex 6). These documents allowed the review team to assess the ongoing evolution of evaluation and results reporting at the WHO and to put in context the findings reported in the evaluation reports.

The review team also carried out an interview with OIOS staff at the WHO to understand better the universe of available WHO evaluation reports and to put in context the changing situation of the evaluation function. Finally, the review team interviewed the CIDA manager most directly responsible for the ongoing relationship between CIDA and the WHO in order to better assess the WHO’s contribution to Canada’s international development priorities.

As with any meta-synthesis, there are methodological challenges that limit the findings. For this review, the most important limitation concerns the generalization of this review’s results to all of the WHO’s programming. The set of available and valid evaluation reports does not provide, on balance, enough coverage of WHO programs and activities in the period to allow for generalization of the results to the WHO’s programming as a whole.Footnote 2 The available evaluation reports do, however, provide insights into the development effectiveness of evaluated WHO programs.

Key Findings

Insufficient evidence available to make conclusions about the World Health Organization

The major finding of this review is that the limited set of available and valid evaluation reports means that there is not enough information to draw conclusions about the WHO’s development effectiveness.

The limited number of available evaluation reports provides some insight into the effectiveness of the WHO programs they cover. Results from the review of these evaluations are presented below, but cannot be generalized to the organization as a whole.

An analysis of the 2012 WHO evaluation policy indicates that while the approval of an evaluation policy represents a positive step, gaps remain in the policy regarding the planning, prioritizing, budgeting and disclosure of WHO evaluations. In addition, the WHO could further clarify the roles and responsibilities of program managers regarding evaluations, and provide guidance to judge the quality of evaluations.

A 2012 United Nations Joint Inspection Unit review also raises concerns about independence and credibility of WHO evaluations. It suggests that the WHO should have a stronger central evaluation capacity, and recommends that a peer review on the evaluation function be conducted by the United Nations Evaluation Group and be presented to the WHO Executive Board by 2014.

Based on the limited sample available, WHO programs appear to be relevant to stakeholder needs and national priorities. Evaluations reported that WHO programs are well suited to the needs of stakeholders, with 89% of evaluations (16 of 18 evaluations which address this criterion) reporting satisfactory or highly satisfactory findings, and well aligned with national development goals (100% of 12 evaluations which address this criterion were rated satisfactory or highly satisfactory). Further, the objectives of WHO-supported projects and programs remain valid over time (100% of 21 evaluations rated satisfactory or better). There is room, however, for better description of the scale of WHO program activities in relation to their objectives (60% of 20 evaluations rated satisfactory) and for more effective partnerships with governments (61% of 18 evaluations rated satisfactory or highly satisfactory).

One factor contributing to the relevance of WHO programs is the organization’s experience in matching program design to the burden of disease in partner countries. Another is consultations with key stakeholders at national and local levels during program design.

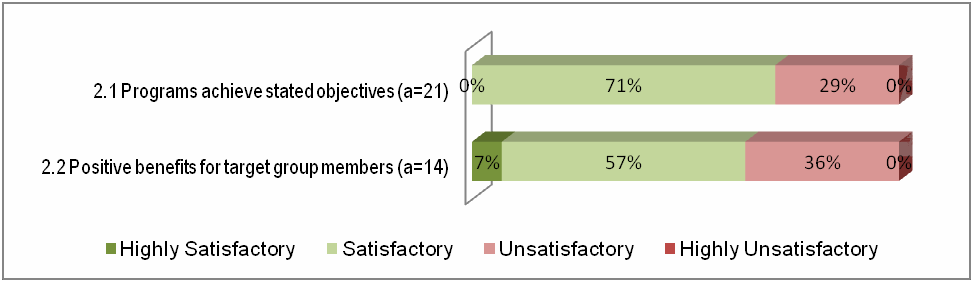

The WHO appears to be effective in achieving most of its development objectives and expected results, with 71% (15/21) of evaluations reporting performance as satisfactory or better. In addition, WHO programs generate benefits for target group members at the individual/household/community level, with 64% of 14 evaluations rating performance for this sub-criterion as satisfactory or highly satisfactory. However, evaluations do not consistently report on the number of beneficiaries who benefited from interventions, and no results are reported for this sub-criterion. Factors contributing to performance in objectives achievement for the WHO include strong technical design of program interventions and high levels of national ownership for key programs.

The benefits of WHO programs appear to be sustainable, but there are challenges in sustaining the capacity of its partners. The benefits of WHO programs are likely to be sustained, with 73% of evaluations reporting satisfactory or highly satisfactory results in this area (although only 11 evaluations address this criterion). However, the WHO does face a challenge in the area of building its partners’ institutional capacity for sustainability. Only 37% (6/16) of evaluations found WHO programs satisfactory in terms of providing support to local institutional capacity for sustainability. One factor contributing to sustainability has been the use of local networks of service providers to sustain the success of immunization programs.

Efficiency—No Results to Report. Only a few evaluations reported on cost efficiency (9) and on whether implementation of programs and achievement of objectives was timely (5). Evaluation reports that addressed these sub-criteria most often reported factors detracting from efficiency. A common feature of these findings was a link between delays in program implementation and increased costs.

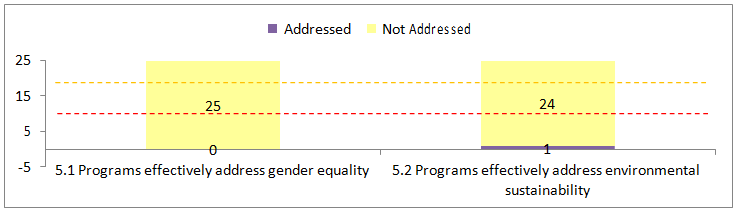

WHO evaluations have not regularly addressed effectiveness in supporting gender equality or environmental sustainability. No evaluations reported on the crosscutting issue of gender equality, and only one reported on environmental sustainability, which prevented the review from identifying any results in this area. The absence of gender equality as an issue in WHO evaluations represents a critical gap in effectiveness information for the organization.

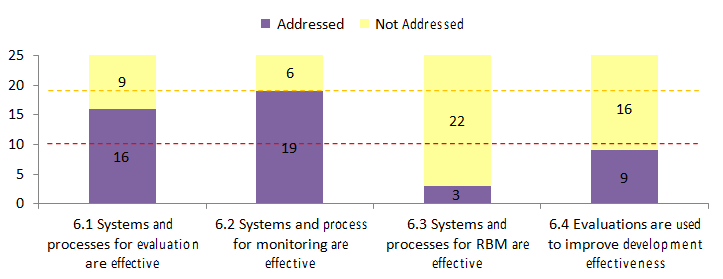

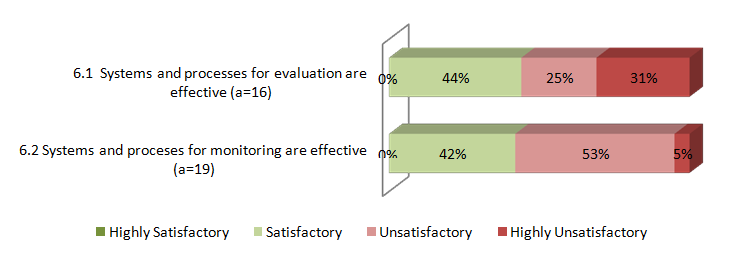

Evaluations reviewed have found WHO systems for evaluation and monitoring to be unsatisfactory. A total of 56% of reported findings on the effectiveness of evaluation systems and processes were classified as unsatisfactory or highly unsatisfactory (9 of 16 evaluations). Similarly, systems for monitoring are unsatisfactory, with 58% (11 of 19) of evaluations’ findings classified as unsatisfactory or highly unsatisfactory. Sub-criteria on effective systems and processes for results-based management and evaluation results used to improve development effectiveness were addressed by only 3 and 9 evaluations, respectively. Therefore, no results are presented for these sub-criteria.

In particular, the evaluations reviewed point to a lack of financial resources and trained local staff as important factors contributing to less-than-satisfactory results in the area of evaluation and monitoring. Where evaluation systems are reported as satisfactory, one contributing factor has been the tradition of joint review of program implementation by the WHO and its partners.

Conclusions: Development Effectiveness of WHO

The evaluation function of the WHO needs strengthening: available evaluation reports do not, on balance, provide enough coverage of WHO programs and activities in the period to allow for generalization of the results to the WHO’s programming as a whole but provide insights into the development effectiveness of evaluated WHO programs.

Performance: Evaluations carried out between 2007 and 2010 indicate that the WHO’s activities are highly relevant to the needs of target group members (16 of 18 evaluations) and are well aligned with national government objectives and priorities (12 of 12 evaluations). In addition, WHO projects in the period under review have achieved their development objectives (15 of 21 evaluations) and resulted in positive benefits for target group members (9 of 14 evaluations). The direct benefits of WHO programming are reported as sustainable in most of the evaluations (8 of 11) that address this issue, although there are persistent challenges regarding the institutional capacity for sustainability of program arrangements (only 6 of 16 evaluations rated well).

Shortcomings: While most WHO programs reviewed have been able to achieve their direct development objectives, the level of expenditure coverage provided by the organization’s evaluations is quite low. Additionally, WHO evaluations were often operationally and technically focused and, while well designed within their own parameters, they did not describe resulting changes for the target or beneficiary group. The evaluation function requires significant strengthening in order to cover WHO programs and projects, and to provide more confidence that the findings reported can be generalized to the organization. Similarly, WHO evaluations have not systematically reviewed the effectiveness of its programs in contributing to gender equality.

In an effort to strengthen the evaluation system at the WHO, the Executive Board approved the implementation of a new evaluation policy at its 131st session, held May 28–29, 2012, as part of the organization’s management reform.

WHO contributes to Canada’s Development Priorities. There is clear evidence that the WHO makes an important direct contribution to Canadian international development priorities such as increasing food security (especially for pregnant and lactating women, for children and for those affected by crises) and securing the future of children and youth. There is also evidence that WHO activities contribute indirectly to sustainable economic growth through the support of public health systems and by assisting developing countries to reduce the burden of communicable and non-communicable diseases.

Recommendations to CIDA

This section contains the recommendations to CIDA based on the findings and conclusions of this effectiveness review of the WHO. Aimed at improving evaluation and results-based management at the WHO, these recommendations are in line with the objectives of Canada’s existing engagements with the WHO. As one of several stakeholders working with the WHO, Canada’s individual influence on the organization is limited and it may need to engage with other stakeholders to implement these recommendations. (See Annex 8 for CIDA’s management response.)

- Canada should monitor efforts at reforming the evaluation function at the WHO as the new policy on evaluation is implemented. In particular, CIDA should use its influence at the Executive Board and with other donor agencies to advocate for a sufficiently resourced and capable evaluation function that can provide good coverage of WHO programming over time.

- CIDA should monitor the implementation of the evaluation policy so that future WHO evaluations sufficiently address gender equality.

- CIDA should encourage the WHO to implement a system for publishing regular (possibly annual) reports on development effectiveness that builds on the work of the reformed evaluation function. In general, there is a need to strengthen the WHO commitment to reporting on the effectiveness of programs.

- CIDA should encourage the WHO to systematically manage for results. The ongoing upgrading and further implementation of the Global Management System at the WHO may offer such an opportunity.

1.0 Introduction

1.1 Background

This report presents the results of a review of the development effectiveness of the United Nations’ (UN) World Health Organization (WHO). The report utilizes a common approach and methodology developed under the guidance of the Organisation for Economic Co-operation and Development’s (OECD) Development Assistance Committee (DAC) Network on Development Evaluation (DAC-EVALNET). Two pilot tests, on the WHO and the Asian Development Bank, were conducted in 2010 during the development phase of the common approach and methodology. The report relies, therefore, on the pilot test analysis of evaluation reports published by the WHO’s Office of Internal Oversight Services, supplemented with a review of WHO and CIDA corporate documents, and consultation with the CIDA manager responsible for managing relations with the WHO.

The method uses a common set of assessment criteria derived from the DAC’s evaluation criteria (Annex 1). The overall approach and methodology (Footnote 3) were endorsed in June 2011 by the members of the DAC-EVALNET as an acceptable approach for assessing the development effectiveness of multilateral organizations. For simplicity, development effectiveness is hereafter referred to as effectiveness in this report.

From its beginnings, the process of developing and implementing the reviews of development effectiveness has been coordinated with the work of the Multilateral Organization Performance Assessment Network (MOPAN). By focusing on development effectiveness and carefully selecting assessment criteria, the reviews seek to avoid duplication or overlap with the MOPAN process. Normal practice has been to conduct such a review in the same year as a MOPAN survey for any given multilateral organization. A MOPAN survey of the WHO was conducted in 2010 in parallel with this analysis.Footnote 4

1.2 Why Conduct this Review?

The review provides Canada and other stakeholders with an independent, evidence-based assessment of the development effectiveness of WHO programs. In addition, the review satisfies evaluation requirements for all programs established by the Government of Canada’s Policy on Evaluation.

The objectives of the review are:

- To provide CIDA with evidence on the development effectiveness of the WHO that can be used to guide Canada’s present engagement with WHO;Footnote 5 and

- To provide evidence on development effectiveness, which can be used in the ongoing relationship between the Government of Canada and the WHO to ensure that Canada’s international development priorities are served by its investments.Footnote 6

Although this report is intended, in part, to support Canada’s accountability requirements within the Government of Canada, the results are expected to be useful to other bilateral stakeholders.

1.3 WHO: A Global Organization Committed to Working for Health

1.3.1 Background and Objectives

As the directing and coordinating authority on international health within the UN system, the WHO employs over 8,000 public health experts, including doctors, epidemiologists, scientists, managers, administrators and other professionals. These health experts work in 147 country offices, six regional offices and at the headquarters in Geneva.Footnote 7 The WHO’s membership includes 194 countries and two associate members (Puerto Rico and Tokelau). They meet annually at the World Health Assembly to set policy for the organization, approve the budget and, every five years, to appoint the Director-General. The World Health Assembly elects a 34-member Executive Board.

The WHO’s Eleventh General Programme of Work 2006–2015 defines the following core functions for the organization:

- providing leadership on matters critical to health and engaging in partnerships where joint action is needed;

- shaping the research agenda and stimulating the generation, translation and dissemination of valuable knowledge;

- setting norms and standards and promoting and monitoring their implementation;

- articulating ethical and evidence-based policy options;

- providing technical support, catalyzing change, and building sustainable institutional capacity; and

- monitoring the health situation and assessing health trends.

WHO also serves as the lead agency to coordinate international humanitarian responses in the Health cluster.Footnote 8 It hosts a number of independent programs and public private partnerships, including the Global Polio Eradication Initiative, the Stop TB Partnership, and the Partnership for Maternal Newborn and Child Health.Footnote 9

1.3.2 Strategic Plan

WHO’s Medium-Term Strategic Plan identifies 11 high-level strategic objectives for improving global health in the 2008 to 2013 period. It also includes two strategic objectives for improving the WHO’s performance.

The eleven strategic objectives in global health are:Footnote 10

- Reduce the burden of communicable diseases;

- Combat HIV/AIDS, tuberculosis and malaria;

- Prevent and reduce chronic non-communicable diseases;

- Improve maternal and child health, sexual and reproductive health, and promote healthy aging;

- Reduce the health consequences of crises and disasters;

- Prevent and reduce risk factors for health, including tobacco, alcohol, drugs and obesity;

- Address social and economic determinants of health;

- Promote a healthier environment;

- Improve nutrition, food safety and food security;

- Improve health services and systems; and

- Ensure improved access, quality and use of medical products and technologies.

The Medium-Term Strategic Plan also identified two objectives directed toward the WHO’s own roles and functions:

- Provide global health leadership in partnership with others; and,

- Develop the WHO as a learning organization.

1.3.3 Work and Geographic Coverage

The WHO is funded through both assessed (Footnote 11) and voluntary contributions from member states. Foreign Affairs and International Trade Canada is responsible for Canada’s assessed contribution. Like other UN organizations, the WHO prepares a biennial budget covering two years of operations. The program budget for the 2010–2011 biennium was USD 4.54 billion, of which USD 945 million was assessed contributions.Footnote 12

Since the budget comprises both assessed and voluntary contributions, the actual funds available to the WHO for expenditure on a program or priority in any given year may be either more or less than budgeted (depending on the volume of voluntary contributions). Table 1 presents the approved budget amount, the actual funds reported as available over the biennium, and the amount spent.Footnote 13

| WHO Strategic Objectives | Approved Budget 2010–2011 (USD millions) | Funds Available at Dec. 31, 2011 (USD millions) | Expenditures at Dec. 31, 2011 (USD millions) | % of Total Expenditures in 2011 |
|---|---|---|---|---|
| 1. Communicable Diseases | 1,268 | 1,472 | 1,290 | 35% |
| 2. HIV/AIDS, Tuberculosis and Malaria | 634 | 535 | 446 | 12% |
| 3. Chronic Non-communicable Diseases | 146 | 112 | 98 | 3% |
| 4. Child, Adolescent, Mother Health and Aging | 333 | 222 | 190 | 5% |
| 5. Emergencies and Disasters | 364 | 393 | 312 | 8% |
| 6. Risk Factors for Health | 162 | 109 | 94 | 3% |
| 7. Social and Economic Determinants of Health | 114 | 42 | 37 | 1% |
| 8. Healthier Environment | 63 | 94 | 83 | 2% |
| 9. Nutrition and Food Safety | 120 | 70 | 62 | 2% |
| 10. Health Systems and Services | 474 | 348 | 298 | 8% |
| 11. Medical Products and Technologies | 115 | 158 | 137 | 4% |
| 12. Global Health Leadership | 223 | 269 | 264 | 7% |
| 13. WHO as a Learning Organization (Footnote 14) | 524 | 420 | 405 | 11% |
| TOTAL | 4,540 | 4,244 | 3,717 | 100% |

Footnote 14: Strategic Objective 13 covers core administrative functions such as planning, reporting, human resources management, financial management and information technology.
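The relationship between budget, available funds and expenditures in the table can be recomputed with a short sketch. The figures are copied from the table (USD millions). The function `summarize` and the two ratios "available vs. budget" and "spent vs. available" are illustrative additions and do not appear in the report, which publishes only the share of total expenditures.

```python
# Figures copied from Table 1, in USD millions:
# (strategic objective, approved budget, funds available, expenditures)
ROWS = [
    ("1. Communicable Diseases", 1268, 1472, 1290),
    ("2. HIV/AIDS, Tuberculosis and Malaria", 634, 535, 446),
    ("3. Chronic Non-communicable Diseases", 146, 112, 98),
    ("4. Child, Adolescent, Mother Health and Aging", 333, 222, 190),
    ("5. Emergencies and Disasters", 364, 393, 312),
    ("6. Risk Factors for Health", 162, 109, 94),
    ("7. Social and Economic Determinants of Health", 114, 42, 37),
    ("8. Healthier Environment", 63, 94, 83),
    ("9. Nutrition and Food Safety", 120, 70, 62),
    ("10. Health Systems and Services", 474, 348, 298),
    ("11. Medical Products and Technologies", 115, 158, 137),
    ("12. Global Health Leadership", 223, 269, 264),
    ("13. WHO as a Learning Organization", 524, 420, 405),
]
REPORTED_TOTAL_EXPENDITURES = 3717  # TOTAL row as printed in the table


def summarize(rows, total_expenditures):
    """Derive three ratios per objective, each rounded to a whole percent."""
    summary = []
    for name, budget, available, spent in rows:
        summary.append({
            "objective": name,
            # Share of total expenditures: the only ratio the table publishes.
            "share_of_expenditures": round(100 * spent / total_expenditures),
            # Hypothetical extra ratios, added here for illustration only.
            "available_vs_budget": round(100 * available / budget),
            "spent_vs_available": round(100 * spent / available),
        })
    return summary


summary = summarize(ROWS, REPORTED_TOTAL_EXPENDITURES)
for row in summary:
    print(f'{row["objective"]:<48}{row["share_of_expenditures"]:>4}%'
          f'{row["available_vs_budget"]:>6}%{row["spent_vs_available"]:>6}%')
```

Two small discrepancies show up when the table is recomputed this way, presumably from rounding in the published figures: the expenditure rows sum to 3,716 rather than the printed 3,717, and the rounded percentage shares sum to 101%.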

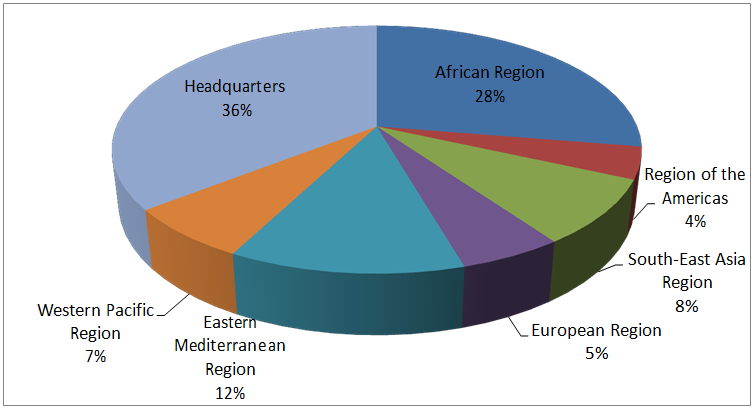

Figure 1: Regional Share of WHO Expenditures in 2010–2011

1.3.4 Evaluation and Results Reporting

Evaluation

Evaluation at the WHO is a decentralized responsibility with most evaluations being commissioned and managed by individual technical programs and regional and country offices. The Office of Internal Oversight Services (OIOS) reports directly to the Director General and conducts internal audits, investigates alleged wrongdoing, and implements the policy on programme evaluation. The OIOS has acted as the custodian of the evaluation function.

When the pilot test was carried out in 2010, evaluations commissioned by WHO were not published either as paper documents or online. Therefore, a request was made to the OIOS to identify and source the evaluations for the pilot test. The OIOS indicated that in most cases, evaluation reports were owned by both WHO and the countries covered in the evaluation. Copies would need to be requested from the WHO Country Offices in question. No central repository of published evaluations was available in either electronic or hard copy form.

OIOS staff indicated in May 2010 that a major review of the evaluation policy at the WHO was underway and that a new policy and structure would be forthcoming.

The recasting and restructuring of WHO evaluation policy has now become one element in a major, coordinated initiative to reform the management of the organization. In preparation for a Special Executive Board Meeting in November 2011, the WHO Secretariat produced a five-element proposal for managerial reforms (Footnote 15) that covered the areas of:

- Organizational effectiveness, alignment and efficiency;

- Improved human resources policies and management;

- Enhanced results-based planning, management and accountability;

- Strengthened financing of the organization, with a corporate approach to resource mobilization; and,

- Strategic communications framework.

Under the heading of results-based planning, management and accountability, the proposed managerial reforms aimed to delineate “an approach to independent evaluation.”

The new evaluation policy was officially adopted by the Executive Board at its 131st session, held in May 2012.Footnote 16 The policy aims to: foster a culture and use of evaluation across the WHO; provide a consolidated institutional framework for evaluation at the three levels of the WHO; and facilitate conformity with best practice and with the norms and standards of the United Nations Evaluation Group.

The new policyFootnote 17 opts to strengthen the OIOS rather than create a new evaluation unit reporting directly to the Board. The policy also delineates in considerable detail the roles and responsibilities of the Executive Board, the newly created Global Working Group on Evaluation, and the OIOS with regard to evaluations. It also describes the principles that guide all evaluation work at the WHO.

The most important new duties of the OIOS include: preparing an annual organization-wide work-plan for evaluations; maintaining an online inventory of evaluations performed at the WHO; ensuring that evaluation reports conform to the requirements of the policy; maintaining a system to track the implementation of management responses to evaluations; and submitting an annual report on evaluation activities to the Executive Board through the Director General.

It remains to be seen how these proposals will be implemented and what effect they will have on the strength of the evaluation function at the WHO. The introduction to the proposed new policy describes the challenge facing the WHO:Footnote 18

“From a broader institutional perspective, it [WHO] has been less successful in fostering an evaluation culture, developing evaluation capacity at all levels of the organization and in promulgating participatory approaches to evaluations. The causes for this include institutional arrangements for the evaluation function (including a lack of a direct mechanism for oversight by the governing bodies) and the absence of an effective budgetary allocation mechanism to resource the evaluation function.”

The WHO has not yet been the subject of a DAC/UNEG Professional Peer Review of the Evaluation Function and the review team did not undertake such a review. However, the review team conducted its own quality review of the evaluations for inclusion in this report.

The results of the review team’s quality analysis were mixed, with 52% of reviewed evaluations scoring 30 points or more, and 24% receiving scores of less than 19 out of a possible 48. For quality criterion I, “evaluation findings are relevant and evidence based,” only three evaluation reports out of 25 scored less than three out of a possible five (see Annex 3 for details of the review methodology and Annex 4 for the evaluation quality scoring grid). All evaluations were retained for the review since scores overall were judged reasonable.

WHO evaluation reports were often operationally and technically focused; that is, they were concerned with how well a given service delivery method, surveillance system, or even the introduction of a new vaccine was implemented rather than the resulting changes for the target or beneficiary group. This is a significant problem for assessing evaluation quality because these studies (while often well designed within their own parameters) often lacked key components of a quality evaluation (such as the effects on the target or beneficiary group) when assessed against the quality criteria derived from UNEG standards.

Although no evaluations were screened out due to quality concerns, the evaluation reports do not address all the criteria identified as essential elements of effective development. As a result, each sub-criterion examined below is addressed by fewer than 25 evaluations. This review examines the data available on each criterion before presenting results, and does not present results for some criteria.

Results Reporting

The WHO does not prepare an annual report on development effectiveness or an annual summary of the results of evaluations. It does provide, however, extensive reporting on the global and regional situation in health to the World Health Assembly each year. It also presents special reports on specific global topics and challenges in public health on an annual basis. Every two years, the WHO publishes a Performance Assessment Report, which describes the extent to which the WHO has achieved its strategic objectives and sub-objectives in the previous biennium.

The Global Management System

For some time, the WHO has been in the process of implementing a system of results monitoring and reporting based on Oracle software. This Global Management System was in development as early as 2008 and is currently being upgraded after a lengthy implementation phase. The Global Management System has as one goal the alignment of program and project planning, implementation and monitoring with agency strategic objectives at a corporate, regional and national level.

Since 2008, the WHO has made an effort to implement the System in each of its regions and by January 2011 was able to report to its Executive Board that it had made “considerable progress” in implementing the system in five regions and at headquarters. The Executive Board (EB128/3) welcomed the reported progress but expressed concern that the Region of the Americas/PAHO had chosen not to implement the system.

In May 2011, the Secretariat at the WHO reported to the Programme, Budget and Administration Committee of the Executive Board on progress in implementing the Global Management System. The Committee in its report to the Executive Board noted that:Footnote 19

“The Global Management System had been successfully rolled out in the Africa Region. Questions were asked regarding the planned upgrade of the System and its related cost as well as the savings that will result from its implementation. Queries were also raised with regard to harmonization between the Global Management System and the new system in the Region of the Americas/PAHO.”

Available documentation on the System suggests its primary focus is still finance, administration, resource allocation planning, and human resources management. It is not yet clear if the System, as implemented, will effectively strengthen the results management and reporting system at the WHO.

At its Special Session on WHO reform in November 2011, the Executive Board welcomed the Director General’s proposals on managerial reform and requested that these proposals be taken forward in several areas, including the improvement of monitoring and reporting.Footnote 20 As already noted, one consequence of this request was the proposal for a new policy on evaluation, which was officially adopted by the Executive Board in May 2012. It is not yet clear whether this will include an effort to strengthen reporting on the development effectiveness of WHO programs, beyond that expected from the full implementation of the Global Management System.

Finally, it should be noted that the WHO published a performance assessment report in May 2012 to track indicators to measure progress toward the WHO’s strategic objectives and sub-objectives over the previous biennium.Footnote 21 A similar report was published in 2010. While the reports provide only global (or sometimes regional) information and do not describe the methodology used to track and verify indicators, they represent an excellent step toward reporting on the WHO’s performance.

2.0 Methodology

This section describes briefly the main elements of the methodology used for the review. A more detailed description of the methodology is presented in Annex 3.

2.1 Rationale

As an important United Nations (UN) Organization, the WHO was chosen for the pilot test of the common approach, together with the Asian Development Bank (a Multilateral Development Bank). The selection of the WHO allowed for testing the approach on a specialized agency of the UN with a strong social mandate. DAC-EVALNET members also expressed considerable interest in an effectiveness review of the WHO as an organization critical to efforts to achieve the health-related Millennium Development Goals (MDGs).

The term “common approach” describes the use of a standard methodology, as implemented in this review, to assess consistently the (development) effectiveness of multilateral organizations. It offers a rapid and cost effective way to assess effectiveness relative to a more time-consuming and costly joint evaluation.Footnote 22 The approach was developed to fill an information gap regarding the effectiveness of multilateral organizations. Although these organizations produce annual reports to their management and/or boards, bilateral shareholders were not receiving a comprehensive overview of the organizations’ performance on the ground. The Multilateral Organization Performance Assessment Network (MOPAN) seeks to address this issue through organizational effectiveness assessments. This approach complements MOPAN’s assessments.

The approach suggests conducting a review based on the organization’s own evaluation reports when two specific conditions exist:Footnote 23

- There is a need for field-tested and evidence-based information on the effectiveness of the multilateral organization.

- The multilateral organization under review has an evaluation function that produces an adequate body of reliable and credible evaluation information that supports the use of a meta-evaluation methodology to synthesize an assessment of the organization’s effectiveness.

The WHO met one of the two requirements for successfully carrying out an effectiveness review at the time of the pilot test. There was a clear need for more field-tested and evidence-based information on the effectiveness of WHO programming. Results for the second test were more marginal. The supply of reasonable quality evaluation reports available at the time of the pilot test was limited, with only 25 such evaluations provided by the WHO over the 2007–2010 period to the pilot test team. The review was completed because these 25 evaluations were able to address, at least moderately, four of the six main criteria used to assess effectiveness. However, this narrow supply of reasonable evaluations limits the extent to which the results can be generalized across the organization.

2.2 Scope

The sample of 25 evaluations available for this review of the WHO provides limited coverage of the over 4.5 billion USD in programming budget available over the 2010–2011 biennium. It is difficult to estimate the level of coverage provided because the evaluation reports often do not include data on the overall value of the programs under evaluation. Nonetheless, the evaluations provide coverage at the country, regional and global/thematic level, and there are some interesting points of congruence between the sample and the profile of the WHO budget.

- Communicable Diseases (strategic objective 1): 8 of the 25 evaluations deal with the implementation of Extended Programs of Immunization in a range of countries (Central African Republic, the Democratic Republic of Congo, Cameroon, Vietnam, Sierra Leone, Zambia and the Philippines). These programs directly contribute to the most significant WHO strategic objective in dollar terms.

- Emergencies and Disasters (strategic objective 5): 3 of the 25 evaluations deal with Health Action in Crisis at the regional or country level: 1 for Africa, 1 for Myanmar, and 1 for Palestine. (In addition, a program evaluation of Health Action in Crisis is included in the global category below.) These programs contribute to the third-largest strategic objective in terms of funding.

- A significant number of the evaluations reviewed are global or organizational in scope. They include:

- Evaluation of the Making Pregnancy Safer Department (2010);

- Independent evaluation of major barriers to interrupting Poliovirus transmission (2009);

- Independent Evaluation of the Stop TB Partnership (2008);

- Review of the Nutrition Programmes of the WHO in the context of current global challenges and the international nutrition architecture (2008);

- Assessment of the Implementation, Impact and Process of WHO Medicines Strategy (2007);

- Health Actions in Crisis Institutional Building Program Evaluation (2007);

- Programmatic Evaluation of Selected Aspects of the Public Health and Environment (PHE) Department (2007); and

- Thematic Evaluation of the WHO’s Work with Collaborating Centres (2007).

The evaluations covered in this review were all produced by the WHO in the period from early 2007 to mid-2010 when the review was carried out (Annex 3). While some covered programming periods before 2007, most of the WHO program activities covered in the reviewed evaluations will have occurred between 2007 and 2010. The review team also analyzed selected WHO documents published in 2011 and early 2012 to provide an update to some of the findings of the reviewed evaluations.

In summary, while the list of suitable evaluations for review obtained from the organization by the pilot test team cannot be easily compared to the geographic and programmatic distribution of activities, it does provide at least a partial body of field-tested evaluation material on effectiveness. For that reason (and to learn what lessons could be drawn from the experience of conducting the study) the team proceeded with the pilot test effectiveness review of the WHO.

In addition to the 25 evaluation reports, the review examined relevant WHO policy and reporting documents, such as the reports of the Programme, Budget and Administration Committee to the Executive Board, Reports on WHO Reform by the Director-General, Evaluation Policy Documents, Annual Reports and the Interim Assessment of the Medium-Term Strategic Plan (see Annex 6). These documents allowed the review team to assess the ongoing evolution of evaluation and results reporting at the WHO and to put in context the findings reported in the evaluation reports.

The review team also carried out an interview with staff of the Office of Internal Oversight Services (OIOS) at the WHO to understand better the universe of available WHO evaluation reports and to put in context the changing situation of the evaluation function. Finally, the review team interviewed the CIDA manager most directly responsible for the ongoing relationship between CIDA and the WHO in order to better assess the organization’s contribution to Canada’s international development priorities.

2.3 Criteria

The methodology does not rely on a particular definition of (development) effectiveness. The Management Group and the Task Team created by the DAC-EVALNET to develop the methodology had previously considered whether an explicit definition of effectiveness was needed. In the absence of an agreed upon definition of effectiveness, the methodology focuses on some of the essential characteristics of developmentally effective multilateral organization programming, as described below:

- Relevance of interventions: The programming is relevant to the needs of target group members;

- Achievement of Development Objectives and Expected Results: Programming contributes to the achievement of development objectives and expected development results at the national and local levels in developing countries;

- Sustainability of Results/Benefits: The benefits experienced by target group members and the development results achieved are sustainable in the future;

- Efficiency: Programming is delivered in a cost-efficient manner;

- Crosscutting Themes (Environmental Sustainability and Gender Equality): Programming is inclusive in that it would support gender equality and would be environmentally sustainable (thereby not compromising the development prospects in the future); and

- Using Evaluation and Monitoring to Improve Effectiveness: Programming enables effective development by allowing participating and supporting organizations to learn from experience and uses performance management and accountability tools, such as evaluation and monitoring, to improve effectiveness over time.

The review methodology therefore involves a systematic and structured meta-synthesis of the findings of WHO evaluations, as they relate to these six main criteria and 18 sub-criteria that are considered essential elements of effective development (Annex 5). The main criteria and sub-criteria are derived from the DAC evaluation criteria.

2.4 Limitations

As with any meta-evaluation, there are methodological challenges that limit the findings. For this review, the limitations include: sampling bias; the challenge of ensuring adequate coverage of the criteria used; and problems with the classification of evaluation findings.

The major limitation to this review of the WHO has been the number of evaluation reports available at the central OIOS and made available to the review team in 2010 (covering the period 2007 to 2010). The set of available and valid evaluation reports does not provide, on balance, enough coverage of WHO programs and activities in the period to allow for generalization of the results to WHO programming as a whole. The 25 available evaluation reports do, however, provide insights into the development effectiveness of WHO programs evaluated during the period.

A further limitation arises from the fact that many of the 25 evaluations did not address some of the sub-criteria used to assess effectiveness. Because of the limitations arising from the small number of evaluations available and the lack of coverage of some sub-criteria, findings are reported below for only those criteria where coverage was rated either strong or moderate.

3.0 Findings on the Development Effectiveness of WHO

Insufficient evidence available to make conclusions about WHO

The major limitation to this review was that only 25 evaluation reports were available at the central OIOS and made available to the review team. This small sample does not provide enough coverage of WHO programs and activities to allow for generalization of results to the WHO as a whole.

The limited number of evaluation reports also did not allow reviewers to control for selection bias in the evaluation sample. This challenge is compounded by the fact that evaluation reports did not always report the programme budget that was evaluated.

Finally, many of the available evaluations did not address the sub-criteria used in this review to assess effectiveness, limiting the amount of information this review is able to report.

Taken together, these limitations mean that there is insufficient information available to make conclusions about the WHO’s development effectiveness. However, in the interest of providing useful, synthesized information, some findings are presented below.

WHO’s 2012 evaluation policy

An analysis of the 2012 WHO evaluation policy (Section 3.6.4) indicates that while the approval of an evaluation policy represents a positive step, gaps remain in the policy regarding the planning, prioritizing, budgeting and disclosure of WHO evaluations.

A 2012 United Nations Joint Inspection Unit review also raises concerns about independence and credibility of WHO evaluations, suggests that the WHO should have a stronger central evaluation capacity, and recommends that a peer review on the evaluation function be conducted by the United Nations Evaluation Group and be presented to the WHO Executive Board by 2014.

Observations on Development Effectiveness of the WHO

This section presents the results of the development effectiveness review as they relate to the six main criteria and their associated sub-criteria (Table 2 and Annex 5). In particular, Table 2 below describes the ratings assigned by the review team of “satisfactory” or “unsatisfactory” for each of the six major criteria and their associated sub-criteria. The table also presents the numbers of evaluations that addressed each sub-criterion (represented by the letter a).Footnote 24

No results are provided for sub-criteria addressed in fewer than 10 evaluations. Where coverage for a given sub-criterion was strong (that is, addressed by 18–25 evaluation reports), or moderate (addressed by 10–17 evaluation reports), results on effectiveness are presented.
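The coverage rule described above can be sketched in a few lines of Python. This is an illustration only: the function names `coverage_level` and `is_reported` are hypothetical and do not come from the review, and the thresholds are taken directly from the paragraph above (18–25 strong, 10–17 moderate, fewer than 10 weak) for a sample of 25 evaluations.

```python
SAMPLE_SIZE = 25  # number of evaluations in the review sample


def coverage_level(a: int) -> str:
    """Classify coverage from a, the number of evaluations addressing a sub-criterion."""
    if not 0 <= a <= SAMPLE_SIZE:
        raise ValueError(f"a must be between 0 and {SAMPLE_SIZE}, got {a}")
    if a >= 18:
        return "Strong"
    if a >= 10:
        return "Moderate"
    return "Weak"


def is_reported(a: int) -> bool:
    """Results are presented only where coverage is strong or moderate."""
    return coverage_level(a) != "Weak"


# Counts taken from Table 2 for sub-criteria 1.1, 1.2 and 2.3
for label, a in [("1.1", 18), ("1.2", 12), ("2.3", 8)]:
    print(label, coverage_level(a), is_reported(a))
```

Applied to the counts in Table 2, the sketch classifies sub-criterion 1.1 (18 evaluations) as strong, 1.2 (12 evaluations) as moderate, and 2.3 (8 evaluations) as weak, so no results would be shown for 2.3.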

Each of the following sections begins with a summary of the coverage and key findings, followed by the main factors contributing to these results. The number of evaluations that identified a particular factor is given to indicate the importance of the positive and negative factors contributing to results under each assessed criterion.

| Sub-criteria | a* | Coverage Level** | Evaluations Rated Satisfactory (%)*** | Evaluations Rated Unsatisfactory (%)*** |
|---|---|---|---|---|
| Relevance of interventions | | | | |
| 1.1 Programs are suited to the needs of target group members | 18 | Strong | 89% | 11% |
| 1.2 Programs are aligned with national development goals | 12 | Moderate | 100% | 0% |
| 1.3 Effective partnerships with governments | 18 | Strong | 61% | 39% |
| 1.4 Program objectives remain valid | 21 | Strong | 100% | 0% |
| 1.5 Program activities are consistent with program goals | 20 | Strong | 60% | 40% |
| Achieving Development Objectives and Expected Results | | | | |
| 2.1 Programs and projects achieve stated objectives | 21 | Strong | 71% | 29% |
| 2.2 Positive benefits for target group members | 14 | Moderate | 64% | 36% |
| 2.3 Substantial numbers of beneficiaries | 8 | Weak | N/A | N/A |
| Sustainability of Results/Benefits | | | | |
| 3.1 Program benefits are likely to continue | 11 | Moderate | 73% | 27% |
| 3.2 Programs support institutional capacity for sustainability | 16 | Moderate | 37% | 63% |
| Efficiency | | | | |
| 4.1 Programs evaluated as cost efficient | 9 | Weak | N/A | N/A |
| 4.2 Program implementation and objectives achieved on time | 5 | Weak | N/A | N/A |
| Crosscutting Themes: Inclusive Development Which can be Sustained (Gender Equality and Environmental Sustainability) | | | | |
| 5.1 Programs effectively address gender equality | 0 | Weak | N/A | N/A |
| 5.2 Changes are environmentally sustainable | 1 | Weak | N/A | N/A |
| Using Evaluation and Monitoring to Improve Development Effectiveness | | | | |
| 6.1 Systems and processes for evaluation are effective | 16 | Moderate | 44% | 56% |
| 6.2 Systems and processes for monitoring are effective | 19 | Strong | 42% | 58% |
| 6.3 Systems and processes for RBM are effective | 3 | Weak | N/A | N/A |
| 6.4 Evaluation results used to improve development effectiveness | 9 | Weak | N/A | N/A |

*a = number of evaluations addressing the given sub-criterion

3.1 WHO programs appear relevant to stakeholder needs and national priorities

3.1.1 Coverage of Sub-criteria

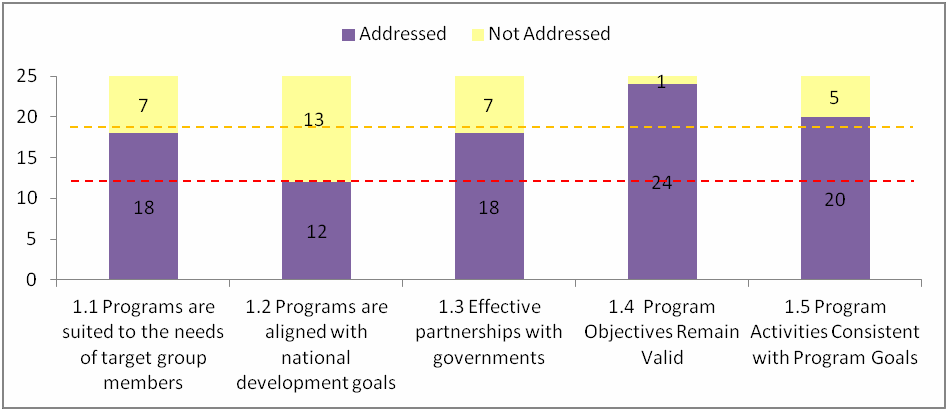

As demonstrated in Figure 2, the evaluations reviewed generally addressed the topic of relevance, with four of five sub-criteria (1.1, 1.3, 1.4 and 1.5) rated strong in coverage. Coverage in one sub-criterion (1.2) was rated moderate, as it was addressed in 12 evaluations.

Figure 2: Number of Evaluations Addressing Sub-criteria for Relevance

3.1.2 Key Findings

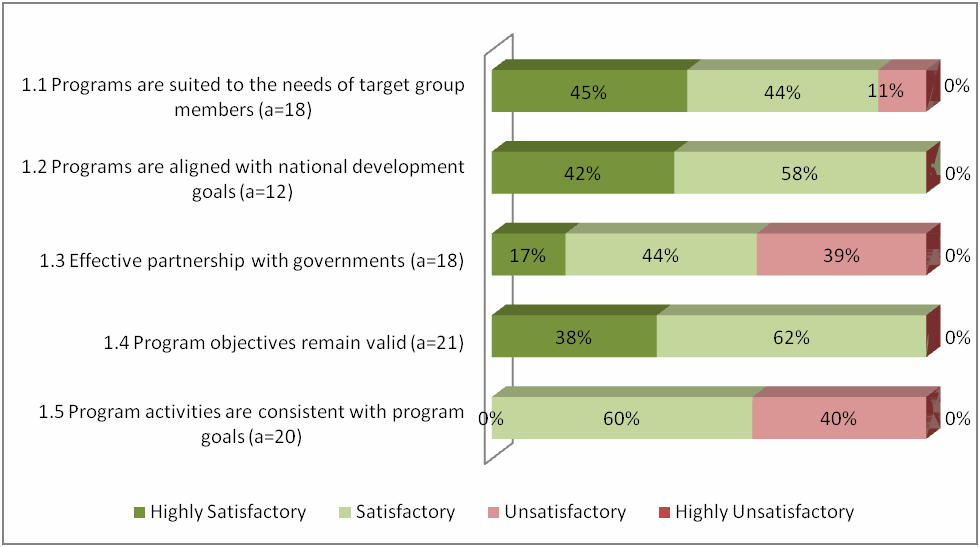

In summary, the evaluations reviewed rate WHO-supported projects and programs highly on relevance. In particular, the programs evaluated are well suited to the needs of target group members and aligned with national priorities, and their objectives remain valid over time, as described in Figure 3 below.

On the question of whether or not WHO-supported programs and projects are suited to the needs of target group members (sub-criterion 1.1), the review found that 16 of the 18 evaluation reports that addressed the criterion (89%) reported findings rated satisfactory or better, and half of those were rated highly satisfactory. All 12 evaluations addressing the question of alignment of WHO-supported programs with national development goals and priorities (sub-criterion 1.2) were rated satisfactory or better.

More evaluations considered sub-criterion 1.3, the effectiveness of partnerships with all levels of government, with 11 of the 18 evaluations (61%) rated satisfactory or better. On the other hand, 7 evaluations (39%) were rated as unsatisfactory.

All 20 evaluations that addressed sub-criterion 1.4 on the continued validity of program objectives reported findings of satisfactory or better. The question of the fit between program objectives and program activities (sub-criterion 1.5) is not quite so clear-cut with only 12 of 20 evaluation reports (60%) reporting findings classified as satisfactory. This also reflects the technically focused nature of some WHO evaluations, which did not allow the review team to verify that the design of projects includes a systematic assessment of causal linkages between program activities and outputs and objectives achievement.

Findings from this review and from the 2010 MOPAN survey converge on the subject of relevance.Footnote 25 The WHO ranked at the top end of ‘adequate’ on the MOPAN indicator for ‘results developed in consultation with beneficiaries’ and ‘strong’ for the indicators ‘expected results consistent with national development strategies’ and ‘supporting national plans.’

Figure 3: Relevance of Interventions (Findings as percentage of number of evaluations addressing sub-criterion (= a), n = 25)

Highlight Box 1 below provides an illustration of successful results for criterion 1.2, “Programs are aligned with national development goals,” as remarked on in the evaluation of child health in Guyana.

Highlight Box 1

Aligning with national priorities in Guyana

A national strategic plan for the reduction of maternal and neonatal mortality 2006-2012 has been developed, which focuses on achieving the MDG mortality targets set in the UN General Assembly Special Session in 2000. Improvement of the health status of mothers and children is also given priority in the National Health Plan 2003-07, and the Poverty Reduction Strategy Paper (2002).

Review of Child Health in Guyana

3.1.3 Contributing Factors

Two important factors contributed to the positive evaluation findings in the area of relevance:

- The WHO’s experience in matching program design to the burden (morbidity and mortality) of disease in programming countries (11 evaluations);Footnote 26 and

- The use of consultations with key stakeholders at national and local levels to ensure program design matched user needs and national priorities.

Highlight Box 2 provides an example of how global consultations were used to help define the framework for the WHO’s intervention under Health Action in Crisis programming in crisis-affected countries.

A number of factors contributed to some of the unsatisfactory evaluation findings in the area of relevance:

- Unclear relations and responsibilities among participating government and non-government organizations (2 evaluations).

- Lack of coordination among supporting organizations (the WHO and the UN Office for Coordination of Humanitarian Affairs, for example), which made it difficult to coordinate with regional and local government partners (1 evaluation).

- Capacity weaknesses among both government and non-government partners (1 evaluation).

- Misunderstandings within the programs over the roles of different agencies and different units of government (1 evaluation).

Highlight Box 2

Consultations Used to Define Institutional Support for Health Action in Crisis (HAC)

In 2005, a consultative process involving over 300 stakeholders globally defined four core functions for WHO’s work in countries affected by crises. This framework was endorsed by the 2005 World Health Assembly resolution WHA58.1. The first core function was to promptly assess health needs of populations affected by crises. This was considered to be particularly well understood and implemented. The evaluation noted increased satisfaction with the improvement of WHO’s capacity for needs assessments and that it improved in all countries visited, although needs always exceeded resources.

Evaluation of HAC Institutions Building Program

3.2 The WHO appears to be effective in achieving its development objectives and expected results

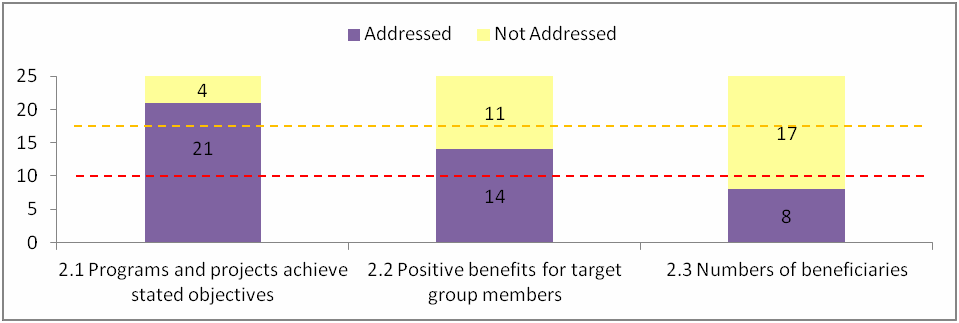

3.2.1 Coverage

Two of the three sub-criteria for objectives achievement and expected results have a strong (sub-criterion 2.1) or moderate (sub-criterion 2.2) level of coverage. As illustrated in Figure 4, coverage of sub-criterion 2.3 (programs and projects made differences for a substantial number of beneficiaries) was weak, with only 8 evaluations addressing the number of program beneficiaries.

Figure 4: Number of Evaluations Addressing Sub-criteria for Objectives Achievement

3.2.2 Key Findings

In summary, the evaluations reviewed indicate that WHO programs achieve their developmental objectives and that they result in benefits for the designated target group members.

Of 21 evaluation reports that addressed sub-criterion 2.1, “Programs and projects achieve stated objectives,” 15 (71.4%) reported findings rated as satisfactory while only 6 (28.6%) were scored unsatisfactory. WHO programs also resulted in benefits for target group members, as noted in the findings of 9 (64%) of the 14 evaluations that addressed sub-criterion 2.2.
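The percentages quoted above follow from simple division of the counts by the number of evaluations addressing each sub-criterion (a). The short Python sketch below reproduces that arithmetic; the function name `rating_shares` and its `ndigits` parameter are hypothetical, and ordinary rounding is an assumption about how the published figures were derived.

```python
from typing import Tuple


def rating_shares(satisfactory: int, addressed: int, ndigits: int = 0) -> Tuple[float, float]:
    """Return (satisfactory %, unsatisfactory %) among evaluations addressing a sub-criterion."""
    if addressed <= 0 or not 0 <= satisfactory <= addressed:
        raise ValueError("need 0 <= satisfactory <= addressed and addressed > 0")
    unsatisfactory = addressed - satisfactory
    return (
        round(100 * satisfactory / addressed, ndigits),
        round(100 * unsatisfactory / addressed, ndigits),
    )


# Sub-criterion 2.1: 15 of 21 evaluations rated satisfactory
print(rating_shares(15, 21, ndigits=1))  # (71.4, 28.6)

# Sub-criterion 2.2: 9 of 14 evaluations rated satisfactory
print(rating_shares(9, 14))  # (64.0, 36.0)
```

Because the denominator is the number of evaluations addressing the sub-criterion rather than the full sample of 25, shares for different sub-criteria are not directly comparable in absolute terms.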

Figure 5: Results for Objectives Achievement (Findings as percentage of number of evaluations addressing sub-criterion (= a), n = 25)

Highlight boxes 3 and 4 provide an illustration of how WHO programs achieve their development objectives. Highlight Box 3 reports that the WHO was able to play a neutral brokering role in order to provide leadership in the coordination of the UN Health Cluster during emergency operations in Africa. Highlight Box 4 provides an example of WHO programming contributing to positive outcomes in newborn and child health in Cambodia.

Highlight Box 3

WHO Health Action in Crisis (HAC) in Africa

The evaluation found evidence that WHO is able to put the neutral brokering role in practice without undermining its organizational mandate. The evaluation confirmed that WHO can implement the leadership role for the coordination of the Health Cluster. Country Offices provided good support to partners with regard to needs assessments, health outcome and health services surveys, and providing regular disease surveillance data.

Evaluation of HAC’s Work in Africa

Highlight Box 4

Contributing to Newborn and Child Health in Cambodia

Overall neonatal and child mortality rates fell between 1996–2000 and 2001–2005. Improvements have been noted in a number of areas, including: neonates protected against tetanus at birth; neonates and mothers receiving early postnatal care contacts; initiation of early breastfeeding; exclusive breastfeeding to six months; living in households using iodized salt; and vaccination coverage. Improvements are needed in other areas, including: antenatal care coverage and skilled attendance at birth.

Review of Newborn and Child Health Program in Cambodia

3.2.3 Contributing Factors

Two common factors were noted in the evaluations as contributing to the achievement of development objectives in WHO programs:

- Strong technical elements in program design which matched the program intervention to the burden of disease (11 evaluations); and

- High levels of national and local ownership resulting from consultative processes of program development (4 evaluations).

Where evaluations reported that benefits for target group members were missing or limited in scope they noted:

- Weak or delayed implementation (2 evaluations);

- Lack of adequate financing and human resources invested in the program (1 evaluation); and

- Delays in the expected increase in donor funding (1 evaluation).

3.3 Benefits of WHO programs appear to be sustainable but there are challenges in sustaining the capacity of partners

3.3.1 Coverage of Sub-criteria

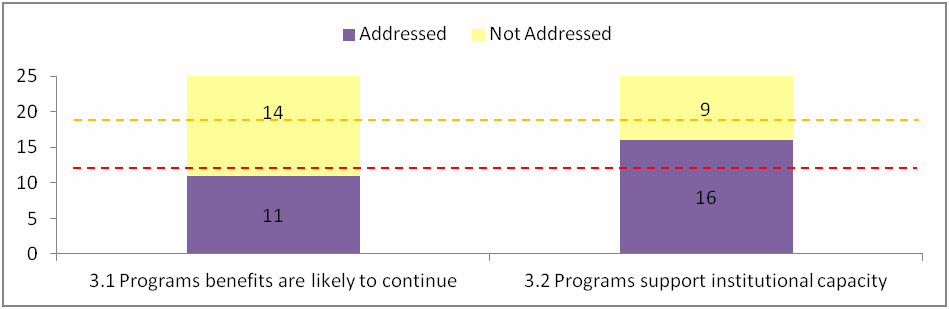

Evaluations provided a moderate level of coverage for both sub-criteria for assessing sustainability. Sub-criterion 3.1, “Program benefits are likely to continue,” was addressed by 11 of 25 evaluation reports, while sub-criterion 3.2, “Programs support institutional capacity for sustainability,” was addressed by 16 of 25 evaluation reports.

Figure 6: Number of Evaluations Addressing Sub-criteria for Sustainability

3.3.2 Key Findings

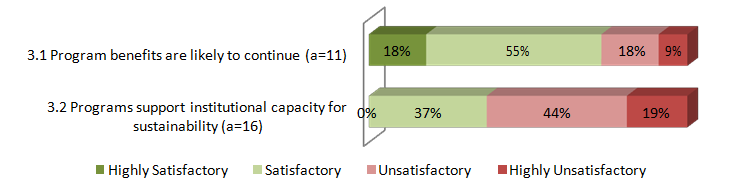

The findings regarding sustainability reflect a mixed level of performance (Figure 7). Evaluations reviewed indicate that the benefits of WHO programs are sustainable but that there are important challenges to ensuring that the institutional arrangements for ongoing program delivery are sustainable. On sub-criterion 3.1, “Program benefits are likely to continue,” 8 of 11 evaluation reports (73%) reported findings of satisfactory or better. In contrast, for sub-criterion 3.2, “Programs support institutional capacity for sustainability,” only 37% of evaluations reported positive findings, with 10 (63%) of 16 evaluations classified as unsatisfactory or worse.

Figure 7: Sustainability of Results/Benefits (Findings as percentage of number of evaluations addressing sub-criterion (= a), n = 25)
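The percentages quoted for sub-criteria 3.1 and 3.2 can be reproduced from the counts given in the text. The following is a minimal sketch, not part of the review's methodology; the function name `share` and the dictionary layout are illustrative assumptions. The count of 6 satisfactory findings for sub-criterion 3.2 is derived from the reported 10 of 16 unsatisfactory findings.

```python
def share(count: int, total: int) -> float:
    """Return count as a percentage of total, to one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total, 1)


# (satisfactory or better, evaluations addressing the sub-criterion)
# Counts are taken from the paragraph above; 6 = 16 - 10 is derived.
sustainability = {
    "3.1 Program benefits are likely to continue": (8, 11),
    "3.2 Programs support institutional capacity": (6, 16),
}

for name, (positive, addressed) in sustainability.items():
    negative = addressed - positive
    print(
        f"{name}: {share(positive, addressed)}% satisfactory or better, "
        f"{share(negative, addressed)}% unsatisfactory or worse "
        f"(a = {addressed})"
    )
```

Before rounding, the shares for sub-criterion 3.2 are 37.5% and 62.5%, which the report presents as 37% and 63%.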

3.3.3 Contributing Factors

Three factors were cited in evaluations as contributing to the sustainability of the results of WHO programming:

- Strong national and local ownership (4 evaluations);

- Consultative processes for identifying key health issues and agreeing on implementation arrangements for solutions (4 evaluations); and

- Use of local networks for sustaining the success of immunization program arrangements.

Two factors were identified as contributing to less than satisfactory results for sustainability:

- The absence of adequate and sustained financial resources from both government and donors to sustain program services at current levels (1 evaluation); and

- Problems in the disruption of WHO services to countries and areas in crisis (1 evaluation).

Highlight Box 5 provides an illustration of strong local institutional capacity and the use of networking to improve program effectiveness and sustainability.

Highlight Box 5

Contribution to Capacity Development

Vietnam’s EPI program and health system is well functioning and well positioned to meet these coming challenges...strong networks established between commune health centers and village health workers have been identified by this review to be a critical factor in immunization program success. The implementation of the school-based measles second dose program also testifies to the strength of local area institutional and social networks in facilitating access of the population to health care services.

Vietnam EPI Evaluation

3.4 WHO evaluations did not address efficiency

3.4.1 Coverage

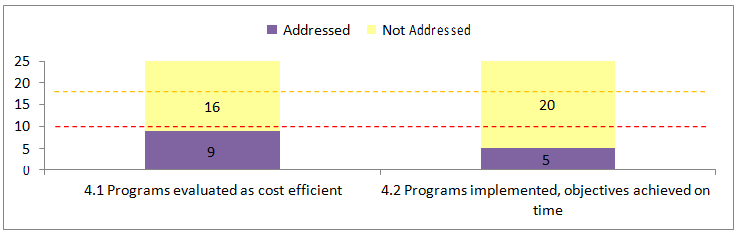

WHO evaluations generally did not address efficiency in the 2007 to 2010 time frame. For the two sub-criteria grouped under the overall heading of efficiency, the combination of a small sample size and few valid cases severely undercuts the validity of any general observation that can be made from the evaluations reviewed. Sub-criterion 4.1, “Programs evaluated as cost efficient,” was addressed in only nine evaluation reports (36% of the sample). Only five evaluations addressed sub-criterion 4.2, “Program implementation and objectives achieved on time.” Therefore, no results are presented for these sub-criteria.

MOPAN survey results and document review are not directly comparable with this review’s criteria of efficiency. MOPAN does measure timeliness of implementation, but not the timeliness of the achievement of objectives.

Figure 8: Number of Evaluations Addressing Sub-criteria for Efficiency

3.4.2 Contributing Factors

Those evaluation reports that did address these sub-criteria most often reported factors detracting from efficiency. A common feature of these findings was a link between delays in program implementation and increased costs. Factors contributing to unsatisfactory results in program efficiency include:

- Inefficient and time-consuming procurement practices (1 evaluation);

- Poor ongoing monitoring of expenditures (1 evaluation);

- Delays in disbursement of funds by partner governments (1 evaluation);

- Lack of adequate training for logistical officers (1 evaluation);

- Delays in the approval process for transfer of funds to other UN partners (1 evaluation);

- Poor financial information, especially on the costs of operations of local partner organizations (1 evaluation); and

- Delays in mobilizing resources, including contracted personnel (1 evaluation).

3.5 WHO evaluations did not address gender equality and environmental sustainability

3.5.1 Coverage

WHO evaluations did not regularly address effectiveness in supporting gender equality or environmental sustainability (Figure 9). Sub-criterion 5.1, “Programs effectively address gender equality,” was not addressed in any of the 25 evaluation reports reviewed. Sub-criterion 5.2, “Changes are environmentally sustainable,” was addressed in one evaluation report. Therefore, no results are presented for these sub-criteria.

The absence of gender equality as an issue in WHO evaluations represents a critical gap in effectiveness information for the organization.

Figure 9: Number of Evaluations Addressing Sub-criteria for Gender Equality and Environmental Sustainability

The absence of gender equality considerations in evaluations supplements the 2010 MOPAN study of the WHO, which rated integration of gender as strong in its document review but only adequate in survey responses. MOPAN noted “On WHO’s integration of gender equality and human rights-based approaches, divergent ratings between the document review and survey suggest that while WHO has the policy frameworks and guidance required in its documents, it may not yet be applying these consistently in its programming work at all levels of the organization.”Footnote 27

This review’s findings are in line with the WHO’s own 2011 report of the baseline assessment of the WHO Gender Strategy, which found that less than 5% of planning officers “strongly” integrated gender into the monitoring and evaluation phases of WHO programming.Footnote 28 On the crosscutting theme of the environment, MOPAN was more positive, noting that: “WHO’s attempts to mainstream environment in its programmatic work were seen as adequate by survey respondents and strong by the document review.” This review is unable to provide results on environmental integration.

3.6 Evaluations report weaknesses in systems for monitoring and evaluation

3.6.1 Coverage of Sub-criteria

Some care is required in interpreting the results reported on the use of monitoring and evaluation to improve effectiveness, since two of the four sub-criteria were rated weak in coverage (Figure 10). Sub-criterion 6.1, “systems and processes for evaluations are effective,” was addressed in 16 evaluation reports and rated moderate in coverage. Sub-criterion 6.2, “systems and processes for monitoring are effective,” was addressed in 19 evaluations and rated strong in coverage. The last two sub-criteria, “systems and processes for results-based management are effective” and “evaluation results used to improve development effectiveness,” were each addressed in fewer than 10 evaluation reports and were both rated weak in coverage.

Figure 10: Number of Evaluations Addressing the Sub-criteria for Use of Evaluation to Improve Development Effectiveness

3.6.2 Key Findings

The WHO’s systems and processes for using monitoring and evaluation to improve effectiveness were assessed as unsatisfactory. Evaluation reports that addressed sub-criterion 6.1, “systems and processes for evaluations are effective,” often reported unsatisfactory findings, with only 7 of 16 evaluations (44%) producing findings classified as satisfactory or better. Similarly, only 8 of 19 evaluations (42%) reported findings coded as satisfactory or better for sub-criterion 6.2, “systems and processes for monitoring are effective.”

Figure 11: Using Evaluation and Monitoring to Strengthen Development Effectiveness (Findings as percentage of number of evaluations addressing sub-criterion (= a), n = 25)

Findings from the MOPAN survey converge with findings of this review. The MOPAN report concluded, “the independence of the Office of Internal Oversight Services (OIOS) was considered adequate by survey respondents and the review of documents. However, other assessment findings suggest that the WHO’s evaluation function should be strengthened: evaluation coverage is limited and difficult to ascertain because of the decentralised nature of evaluation; there is no repository of evaluations (although an inventory does exist) and evaluations are difficult to access through the WHO website.” Footnote 29

MOPAN survey results indicated the likelihood that programs would be subject to independent evaluation was near the bottom of the ‘adequate’ range. However, 40% of respondents answered ‘don’t know’ to this question, and the document review rated the WHO as ‘inadequate’.

MOPAN’s document review rated as ‘adequate’ adjustments to strategies and policies as well as to programming on the basis of performance information, but noted “Although there are periodic evaluations of WHO programs (which assess the outcomes of the WHO’s work along the lines of thematic, programmatic or country evaluations), the reports to the Executive Board do not seem to draw on the evaluation findings or recommendations.”Footnote 30 Similarly, MOPAN found only one concrete example of performance information leading to adjustment to programming.

3.6.3 Contributing Factors

Three factors were cited as contributing to positive results in relation to the strength of evaluation and monitoring systems:

- A tradition of joint review of WHO programs involving the WHO, host governments and other stakeholders (3 evaluations);

- The practice of conducting regular or mid-term evaluations of new programs such as the introduction of a new vaccine (3 evaluations); and

- The practice of independent external evaluations of large WHO-supported programs (2 evaluations).

The most frequent critique of evaluation systems and procedures was that the evaluation reports did not refer to similar evaluations of the same program in the past or plans for the future (4 evaluations). In general, the evaluation reports did not include information that would allow the reviewer to place this particular evaluation in the context of a wider system or process calling for systematic evaluation of the programs under review.

Other factors that contributed to less-than-satisfactory results for the strength of evaluation and monitoring systems include:

- Institutional weakness among partners and, more specifically, failure to staff designated monitoring and evaluation positions which are a feature of program design requirements (4 evaluations);

- Missing data or a failure to collect agreed-upon data on a regular and reliable basis (6 evaluations); and

- A sense among some WHO partners that data requirements are overly bureaucratic and that the data is not being used, so less effort is put into data collection (1 evaluation).

Highlight Box 6 provides an illustration of how lack of resources and weak commitment to the requirement for results reporting (seen as overly bureaucratic) have undermined the effectiveness of monitoring and evaluation.

Highlight Box 6

Lack of resources and commitment to evaluation and monitoring by collaborating centres

With few exceptions, the lack of systematic monitoring and evaluation is obvious. The reasons mentioned are lack of manpower and interest, ambiguity of responsible technical officers about their role, and uncertainty regarding the role of regional Collaborating Centre focal points. The annual report submitted by Collaborating Centres is often perceived as a bureaucratic formality, rather than a useful instrument to assess progress and improve collaboration, especially in the case of active networks that have active ongoing monitoring and reporting mechanisms.

Thematic Evaluation of WHO’s Work with Collaborating Centres

3.6.4 A look at WHO’s 2012 evaluation policy

A brief comparison of WHO’s 2012 evaluation policy to standards from the United Nations Evaluation Group (UNEG) highlights improvements and areas for continued attention at the WHO. UNEG is a network that brings together evaluation units in the UN system. Its standards for evaluation in the United Nations system describe, among other things, the expectations for evaluation policies in UN organizations. Table 3 compares the UNEG standards with the WHO’s new evaluation policy.

Table 3: Comparing United Nations Evaluation Group Standards to WHO Evaluation Policy

UNEG requirement:

Clear explanation of the concept and role of evaluation within the organization

Addressed by WHO evaluation policy?

Yes

UNEG requirement:

Clear definition of the roles and responsibilities of the evaluation professionals, senior management and programme managers

Addressed by WHO evaluation policy?

Yes, but not clearly: the role of program managers is described under the utilization and follow-up section, with no clear accountability and oversight.

UNEG requirement:

An emphasis on the need for adherence to the organization’s evaluation guidelines

Addressed by WHO evaluation policy?

Partially: principles and norms of evaluation are clearly identified, including a ‘quality’ sub-section with a reference to “applicable guidelines.” Although the new policy replaces the previous evaluation guidelines, WHO does not yet appear to have new evaluation guidelines.

UNEG requirement:

Explanation of how evaluations are prioritized and planned