Archived information

Information identified as archived is provided for reference, research or recordkeeping purposes. It is not subject to the Government of Canada Web Standards and has not been altered or updated since it was archived. Please contact us to request a format other than those available.

Development Effectiveness Review of the United Nations Children's Fund (UNICEF)

Table of Contents

- Acknowledgments

- List of Acronyms and Abbreviations

- Executive Summary

- 1 Introduction

- 2 Methodology

- 3 Findings on UNICEF’s Development Effectiveness

- 4 Conclusions

- 5 Canada’s Relationship with UNICEF

Annexes

- Annex 1: Effectiveness Criteria

- Annex 2: Evaluation Sample

- Annex 3: Methodology

- Annex 4: Guide for Review Team to Classify Evaluation Findings

- Annex 5: Global Evaluations and UNICEF Documents

- Annex 6: Selected Results for Comparisons of Least-Developed and Middle-Income Countries and by Focus Area and Humanitarian Action

- Annex 7: UNICEF Staff Interviewed

- Annex 8: Data sources for Section 5.0

- Annex 9: Management Response from DFATD’s Global Issues and Development Branch

List of Tables

- Table 1: UNICEF Activities and Programs in Five Focus Areas of the Medium-Term Strategic Plan

- Table 2: Performance Indicators for the Evaluation Function at UNICEF 2011

- Table 3: Coverage and Summary Results for Each Sub-criterion

- Table 4: Canada’s Development Assistance to UNICEF: 2008-2009 to 2012-2013

- Table 5: Development of Populations of UNICEF Evaluations

- Table 6: Quality Review Grid

- Table 7: Results of Quality Review Scoring

- Table 8: Profile of Population and Sample of Evaluations, by Year

- Table 9: Profile of Sample of Evaluations, by Range of Years Covered

- Table 10: Profile of Sample of Evaluations, by Commissioning Office

- Table 11: Profile of Population and Sample of Evaluations, by Region

- Table 12: Profile of Population and Sample of Evaluations, by Country Classification

- Table 13: Profile of Population and Sample of Development Evaluations, by Funding and Country, by Funding for 2007 – 2011

- Table 14: Profile of Population and Sample of Humanitarian Evaluations, by Funding and Country, by Funding for 2007 - 2011

- Table 15: Profile of Population and Sample of Evaluations, by Medium-Term Strategic Plan Focus Area and Humanitarian Action

List of Figures

- Figure 1: UNICEF Expenditures, by Focus Area and Humanitarian Action, 2009-2011

- Figure 2: UNICEF Expenditures, by Type, 2009 - 2011

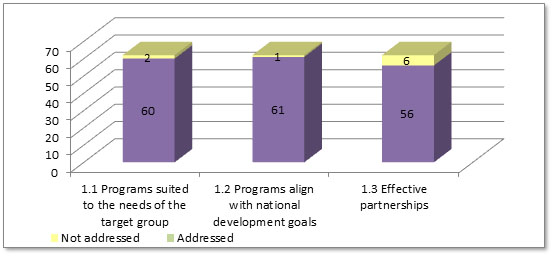

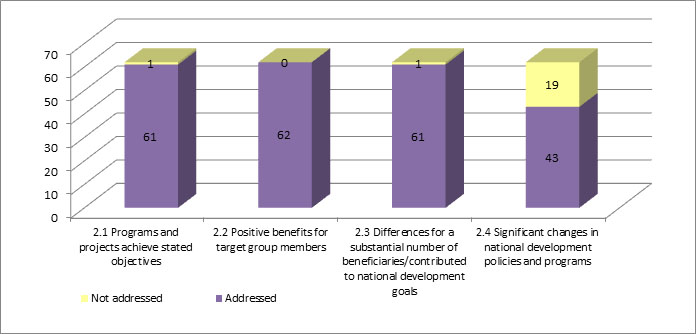

- Figure 3: Number of Evaluations Addressing Sub-criteria for Relevance

- Figure 4: Findings for Relevance

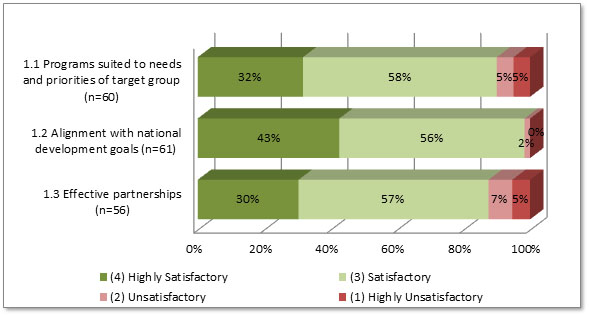

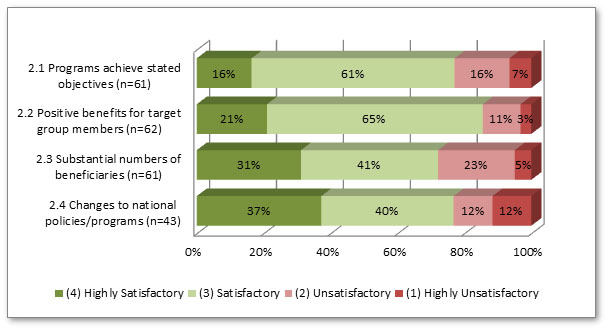

- Figure 5: Number of Evaluations Addressing Sub-criteria for Objectives Achievement

- Figure 6: Findings for Objectives Achievement

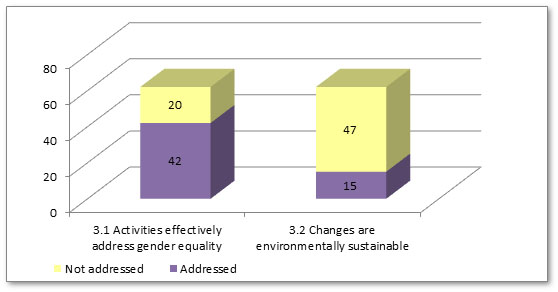

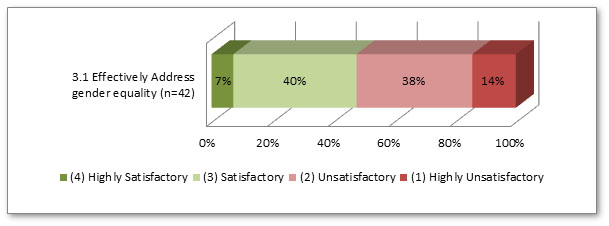

- Figure 7: Number of Evaluations Addressing Sub-criteria for Crosscutting Themes

- Figure 8: Findings for Effectiveness in Supporting Gender Equality

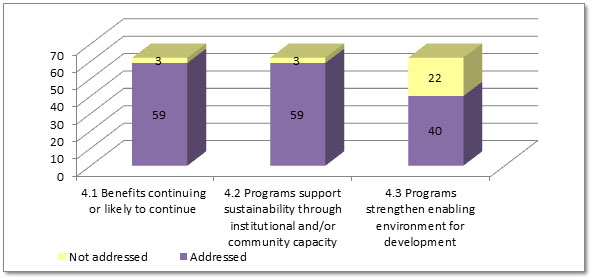

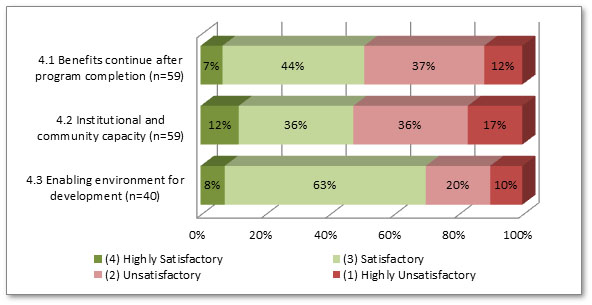

- Figure 9: Number of Evaluations Addressing Sub-criteria for Sustainability

- Figure 10: Findings for Sustainability

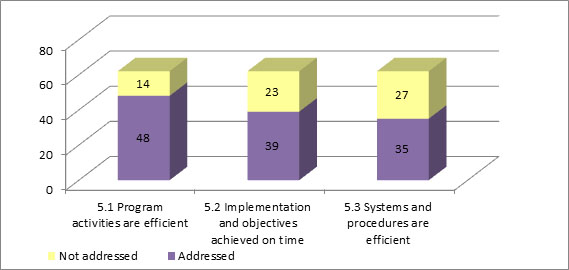

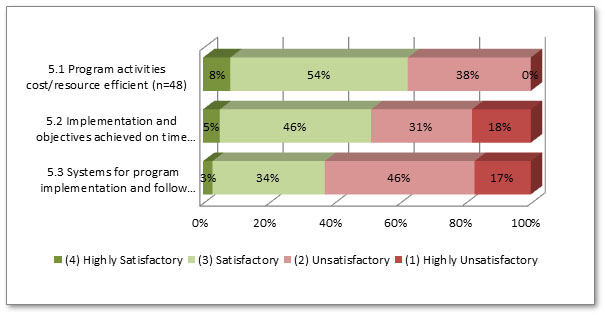

- Figure 11: Number of Evaluations Addressing Sub-criteria for Efficiency

- Figure 12: Findings on Efficiency

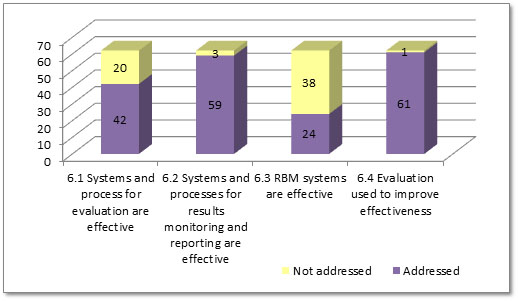

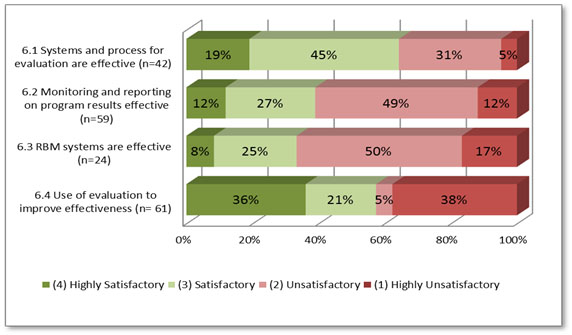

- Figure 13: Number of Evaluations Addressing Sub-criteria for Using Evaluation and Monitoring

- Figure 14: Findings for Using Evaluation and Monitoring

Acknowledgments

The Department of Foreign Affairs, Trade and Development's (DFATD) Development Evaluation Division wishes to thank all who have contributed to this review exercise. DFATD is grateful for the valued input and support received from all those involved in this collaborative effort.

Our thanks go first to those who collectively facilitated the donor-neutral assessment of the development effectiveness of UNICEF. The assessment was led by the Netherlands Ministry of Foreign Affairs under the leadership of Mr. Ted Kliest. A consulting team from Goss Gilroy Inc. conducted the analysis and wrote the donor-neutral assessment. The team included Mr. Ted Freeman, Ms. Sheila Dohoo Faure, Ms. Louise Mailloux, Ms. Tasha Truant and Mr. Robert Barnes. The donor-neutral assessment is published on the website of the Organisation for Economic Co-operation and Development (OECD).

DFATD produced the chapter related to Canada's relationship with UNICEF. We wish to thank the United Nations, Commonwealth and Francophonie Program Team in the Global Issues and Development Branch at DFATD for their engagement in the review.

From DFATD's Development Evaluation Division, we wish to thank Ms. Lamia Naji, Junior Evaluation Officer, and Dr. Tricia Vanderkooy, Evaluation Manager, for conducting the assessment of Canada's engagement with UNICEF. The chapter on Canada's engagement with UNICEF was written by Ms. Naji, with editorial assistance from Dr. Vanderkooy. We also thank Mr. Andres Velez-Guerra, Team Leader, and Mr. James Melanson, Evaluation Director, for overseeing the review.

Caroline Leclerc

Head of Development Evaluation

List of Acronyms and Abbreviations

- CCC

- Core Commitments to Children in Humanitarian Emergencies

- CIDA

- Canadian International Development Agency

- DAC

- Development Assistance Committee

- DAC-EVALNET

- DAC Network on Development Evaluation

- DFATD

- Foreign Affairs, Trade and Development Canada

- DFID

- Department for International Development (United Kingdom)

- GID

- Global Issues and Development Branch (DFATD)

- MFM

- Global Issues and Development Branch (DFATD) (Footnote 1)

- MOPAN

- Multilateral Organizations Performance and Assessment Network

- MoRES

- Monitoring Results for Equity System

- QCPR

- Quadrennial Comprehensive Policy Review

- UN

- United Nations

- UNAIDS

- Joint United Nations Programme on HIV/AIDS

- UNDAF

- United Nations Development Assistance Framework

- UNEG

- United Nations Evaluation Group

- UNICEF

- United Nations Children's Fund

- UNGEI

- United Nations Girls' Education Initiative

- VISION

- Virtual Integrated System of Information

- WEOG

- Western Europe and Others Group

Executive Summary

Background

This report presents the results of a review of the effectiveness of development and humanitarian programming supported by the United Nations Children's Fund (UNICEF). It was commissioned by the Netherlands Ministry of Foreign Affairs and carried out by a team from Goss Gilroy Inc. of Ottawa, Canada (Footnote 2). In addition, a review of Canada's engagement with UNICEF has been undertaken by Foreign Affairs, Trade and Development Canada (DFATD), and is presented in chapter 5.

The common approach and methodology for this review were developed under the guidance of the Organisation for Economic Co-operation and Development's Development Assistance Committee (DAC) Network on Development Evaluation (DAC-EVALNET). The review relies on the content of published evaluation reports produced by UNICEF, supplemented with a review of UNICEF corporate documents and consultation with staff at UNICEF headquarters in New York.

Five UNICEF Program Focus Areas

- Young child survival and development

- Basic education and gender equality

- HIV/AIDS and children

- Child protection from violence, exploitation and abuse

- Policy advocacy and partnerships for children’s rights

Medium-Term Strategic Plan, 2006 – 2013.

Purpose

The purpose of the review is to provide an independent, evidence-based assessment of the humanitarian and development effectiveness of UNICEF operations (hereafter referred to as "programs") for use by all stakeholders. The approach is intended to work in coordination with initiatives such as the DAC-EVALNET/United Nations Evaluation Group (UNEG) Peer Reviews of United Nations organization evaluation functions and the assessments carried out by the Multilateral Organizations Performance and Assessment Network (MOPAN). It also recognizes that multilateral organizations continue to strengthen their reporting on development effectiveness and should eventually provide regular, evidence-based, field-tested reporting on effectiveness themselves.

Approach and Methodology

The review, carried out from October 2012 to May 2013, began with a preliminary review of UNICEF documents and the identification of the population of UNICEF evaluation reports found in UNICEF's Global Database and in reports from its Global Evaluation Report Oversight System. Interviews were then conducted with evaluation and program staff at UNICEF headquarters in New York. In consultation with evaluation staff, the review team drew a sample of seventy UNICEF evaluation reports, published between 2009 and 2011, that was illustrative of UNICEF programming. Evaluations were selected to include development and humanitarian programming, programming from the various regions and types of countries in which UNICEF works, and programming from each of the five focus areas in UNICEF's Medium-Term Strategic Plan. It should be noted, however, that the population of evaluations identified at UNICEF under-represented humanitarian action and programming in some of the countries that received the largest amounts of development or humanitarian funding.

The quality of each evaluation was assessed against criteria derived from the UNEG Norms and Standards for evaluation. Because the sample did not include any evaluations rated "not confident to act" by UNICEF's own Global Evaluation Report Oversight System, only four reports were excluded due to quality concerns. Four others were excluded because they were too narrowly focused, did not cover a minimum number of effectiveness criteria, or duplicated evaluation results reported in other evaluations in the sample. As a result, 62 of the 70 sampled evaluation reports were retained for systematic rating in the review.

Each evaluation report was rated, using a four-point scale that ranged from Highly Unsatisfactory to Highly Satisfactory, on six key Development Effectiveness Criteria and nineteen sub-criteria. The review team also identified the factors contributing to both positive and negative findings for each of the six key criteria used to assess the development effectiveness as reported by the evaluations. The results of the meta-synthesis of evaluation findings were then summarized and presented to UNICEF staff prior to the development of this report.
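The sample arithmetic and the rating rule described above can be expressed as a short sketch. It assumes, hypothetically, that the four points of the scale are coded 1 to 4 and that a finding counts as positive when it is Satisfactory or Highly Satisfactory. The names `Rating` and `positive_share` and the example ratings are illustrative only; they are not taken from the review's own tools or data.

```python
from enum import IntEnum


class Rating(IntEnum):
    """Four-point scale; the numeric codes 1-4 are an illustrative assumption."""
    HIGHLY_UNSATISFACTORY = 1
    UNSATISFACTORY = 2
    SATISFACTORY = 3
    HIGHLY_SATISFACTORY = 4


# Sample attrition as described in the text: 70 reports drawn,
# 4 excluded for quality, 4 excluded for other reasons.
DRAWN = 70
EXCLUDED_QUALITY = 4
EXCLUDED_OTHER = 4
RETAINED = DRAWN - EXCLUDED_QUALITY - EXCLUDED_OTHER


def positive_share(ratings):
    """Share of ratings that are Satisfactory or better.

    `ratings` holds one Rating per evaluation that addressed the
    sub-criterion; evaluations that did not address it are simply absent.
    Returns None when no evaluation addressed the sub-criterion.
    """
    if not ratings:
        return None
    positive = sum(1 for r in ratings if r >= Rating.SATISFACTORY)
    return positive / len(ratings)


if __name__ == "__main__":
    # Hypothetical ratings for one sub-criterion (not data from the review).
    example = [Rating.SATISFACTORY, Rating.HIGHLY_SATISFACTORY,
               Rating.UNSATISFACTORY, Rating.SATISFACTORY]
    print(RETAINED)                 # 62
    print(positive_share(example))  # 0.75
```

Computing the share only over evaluations that addressed a sub-criterion mirrors how the findings are reported, which is why coverage has to be considered alongside each result.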

Assessment Criteria

- Relevance of Interventions

- The Achievement of Humanitarian / Development Objectives and Expected Results

- Cross Cutting Themes (Environmental Sustainability and Gender Equality)

- Sustainability of Results/Benefits

- Efficiency

- Using Evaluation and Monitoring to Improve Humanitarian and Development Effectiveness

Coverage of the Assessment Criteria in Reviewed Evaluations

The review established ranges for assessing how well each sub-criterion was covered in the sixty-two evaluations, based on the number of evaluations that addressed it. Coverage could be strong, moderate or weak, and findings for sub-criteria with weak coverage were not reported. Coverage was rated as weak for only two sub-criteria: the extent to which program-supported changes are environmentally sustainable and the effectiveness of systems for results-based management.

Limitation: Retrospective Nature of the Review

The methodology section of the report describes in more detail some limitations of the review related to sampling issues and coverage. It is worth noting here that a review of development effectiveness that relies mainly on published evaluation documents is inherently retrospective rather than forward-looking. Some important issues identified in the evaluations reviewed have already been addressed, or are being addressed, by UNICEF. As one prominent example, Programme Division has used the results of recent UNICEF global evaluations in preparing inputs to the next strategic plan. Where this has been pointed out to the team by UNICEF staff, or where the documents contain examples of UNICEF taking action, the actions are noted in this report.

Coverage Ratings

Strong coverage: Sub-criterion was addressed in 45 – 62 evaluations

Moderate coverage: 30 – 44 evaluations

Weak coverage: fewer than 30 evaluations
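The coverage ranges above amount to a simple classification rule. The sketch below applies them to a count of evaluations addressing a sub-criterion. The function name `coverage` and the example counts are hypothetical, and the sketch assumes counts are whole numbers between 0 and the 62 evaluations retained.

```python
TOTAL_EVALUATIONS = 62  # evaluations retained for systematic rating


def coverage(n_addressing: int) -> str:
    """Classify coverage of a sub-criterion using the review's stated ranges."""
    if not 0 <= n_addressing <= TOTAL_EVALUATIONS:
        raise ValueError(f"count must be between 0 and {TOTAL_EVALUATIONS}")
    if n_addressing >= 45:   # 45 - 62 evaluations
        return "strong"
    if n_addressing >= 30:   # 30 - 44 evaluations
        return "moderate"
    return "weak"            # fewer than 30: findings not reported


if __name__ == "__main__":
    # Hypothetical counts, chosen only to exercise each range.
    print(coverage(50))  # strong
    print(coverage(42))  # moderate
    print(coverage(12))  # weak
```

Checking the upper range first means each count falls into exactly one band, matching the non-overlapping ranges listed above.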

Findings of the Development Effectiveness Review

Relevance of UNICEF-Supported Programs

The relevance of UNICEF's programming is very well covered in the evaluations, and the findings show that programming is highly relevant to the needs of the target groups. The vast majority of evaluations reported high suitability to target group needs, alignment with national development goals and effective partnerships. Programming in middle-income countries was, however, somewhat more likely than programming in least-developed countries to be rated higher for effective partnerships.

Alignment of UNICEF's programming with government priorities was the major factor contributing to the relevance of programming, particularly where programming supported the implementation of public policies, supported programming and capacity building in national governments or institutions, and was based on partnerships with key stakeholders. When these conditions were not met, notably when there were gaps in UNICEF's partnerships with national governments (often because the government was not taking adequate ownership) and with other UN agencies, the relevance of programming suffered.

Objectives Achievement

Objectives achievement is generally very well covered in the evaluations, with the exception of the sub-criterion related to addressing changes in national development policies and programs. The findings are also largely positive. Three-quarters of the evaluations reported positive findings on the extent to which UNICEF-supported programs achieve their stated objectives. Positive results were even stronger for the programs' ability to provide positive benefits for the target population, with nearly nine out of ten evaluations reporting satisfactory or better findings. Results were somewhat less positive on the scale of program benefits measured in terms of the number of program beneficiaries. Finally, three-quarters of the programs were rated positively for their ability to support positive changes in national policies and programs.

High-quality program design (in terms of focus, alignment and the engagement of national partners) contributed positively to objectives achievement, as did UNICEF's role in influencing national policy and the appropriateness of the scope and resources of programs. Conversely, weaknesses in program design, inadequate budgets and insufficient outputs detracted from the achievement of objectives.

Gender Equality

The evaluations presented a challenging picture of UNICEF's effectiveness in supporting gender equality. First, coverage of this sub-criterion in the evaluations was not high. Second, fewer than half of the evaluations that did address gender equality reported findings of satisfactory or better. Since twenty evaluations did not address gender equality at all, another way of expressing the result is that only one-third of all evaluations reflected that the programs effectively addressed gender equality. This finding is surprising given that addressing gender equality is a "foundation strategy" for UNICEF programming. It is also surprising that findings of effective gender equality were somewhat more likely to be reported in evaluations of programs in least-developed countries than in middle-income countries.
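The reframing in the paragraph above follows from simple arithmetic on the figures it reports. The sketch assumes that "fewer than half" means strictly fewer than half of the evaluations that addressed gender equality; under that reading, at most 20 of the 62 evaluations could have reported satisfactory or better findings, which is roughly one-third. The variable names are illustrative.

```python
TOTAL = 62          # evaluations retained for rating
NOT_ADDRESSED = 20  # evaluations that did not address gender equality

addressed = TOTAL - NOT_ADDRESSED

# "Fewer than half" of the evaluations that addressed gender equality were
# satisfactory or better, read here as strictly fewer than half, which
# gives an upper bound on the positive count.
max_positive = (addressed - 1) // 2

# Upper bound on the positive share, measured against all 62 evaluations.
max_share_of_all = max_positive / TOTAL

if __name__ == "__main__":
    print(addressed)                    # 42
    print(max_positive)                 # 20
    print(round(max_share_of_all, 2))   # 0.32
```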

UNICEF has recently been engaged in efforts to improve its effectiveness in the area of gender equality. In response to the 2008 Gender Evaluation, the agency launched the Strategic Priority Action Plan for Gender Equality 2010-2012, which identified eight areas of organizational action. UNICEF also reports its intent to take visible action on gender equality in the development of its new strategic plan.

As with other sub-criteria, the presence of specific factors contributed to effectiveness in gender equality, whereas the absence of the same factors detracted from it. The factor identified most often as contributing to positive results in gender equality was the inclusion of gender-specific objectives and targets. The most common factors detracting from gender equality effectiveness were the lack of any specific gender equality objectives and/or the lack of a gender perspective in program design and implementation.

Sustainability

Overall, the results with respect to sustainability are mixed. Coverage of the sustainability sub-criteria in the evaluations was generally good. About half the evaluations reported positive ratings for the likelihood that benefits would continue and for support to sustainability through the development of institutional and/or community capacity. Evaluations of programming in middle-income countries appeared somewhat more likely to receive positive ratings for institutional and/or community capacity building than those from least-developed countries. The evaluations were more positive about the strengthening of the enabling environment for development, with three-quarters reporting positive findings. This may be because efforts to improve the enabling environment do not, in themselves, need to be ongoing; it may therefore be easier to achieve positive findings for this sub-criterion than for the others, which require evidence of long-term impact.

Strong national government and community engagement contributed positively to sustainability. On the other hand, weaknesses in program design and implementation (notably the failure to plan for sustainability), the lack of adequate capacity at the national level and an ongoing dependence on donor support detracted from sustainability.

Efficiency

The coverage of efficiency in the evaluations was relatively good, but findings are mixed and require careful interpretation. The fact that two-thirds of the evaluations reported positive findings on the cost efficiency of programs represents a reasonably good result.

Nonetheless, it is a concern that, of the evaluations that addressed these sub-criteria, half reported negative findings on timeliness and two-thirds reported negative findings on the efficiency of administrative systems. Also worrying is the fact that about one in five reported a finding of highly unsatisfactory for both sub-criteria. However, since there may be a tendency to under-report findings with respect to timeliness and the efficiency of administrative systems when no problems are encountered at field level, the results may be biased toward negative findings.

Effective monitoring systems, the use of low-cost approaches to service delivery and strong financial planning and cash-flow management contributed to cost efficiency. On the other hand, the lack of regular and timely reporting of appropriate cost data detracted from cost efficiency. Some evaluations also noted that delays in program start-up and in the supply of inputs reduced cost efficiency.

Similar themes are reflected in the factors that contributed to the timeliness of program implementation and follow-up and to the effectiveness of the systems and procedures supporting them. The strong management and programming capacity of UNICEF country and regional offices, timely coordination among partner organizations, effective program monitoring and regular supervision, and effective cash-flow management all contributed positively to a rapid response to program demands. The factors that detracted from timeliness related to UNICEF's administrative systems and procedures: notably, lengthy procurement delays and rigid, cumbersome financial systems and procedures for disbursing funds delayed program implementation.

Using Evaluation and Monitoring to Improve Humanitarian and Development Effectiveness

Coverage for the sub-criteria regarding the use of monitoring and evaluation was strong and the results are somewhat positive. Between half and two-thirds of the evaluations received positive ratings for the effectiveness of systems and processes for evaluation, and for the use of evaluation to improve effectiveness. However, just over one-third of the evaluations got a positive rating for the effectiveness of systems and processes for results monitoring and reporting.

The evaluations identified few factors that contributed positively to the use of monitoring and evaluation results to improve development effectiveness, beyond the fact that being part of a broader evaluation process (either UNICEF's country program evaluations or a donor-led global evaluation) contributed to the use of monitoring and evaluation. The use of evaluation is supported by the increasing tendency to prepare evaluation management responses that include action plans for the implementation of evaluation recommendations. However, two factors detracted from the use of monitoring and evaluation: the lack of a clear results framework and inadequate or inappropriate indicators, and inadequate baseline information.

Since 2011, UNICEF has engaged in a very significant effort to strengthen program results definition, monitoring and reporting, as it developed and implemented the Monitoring Results for Equity System (MoRES). This system is to be subject to a formative evaluation to be carried out by UNICEF's Evaluation Office in 2013. In addition, UNICEF recently used existing global evaluation reports to inform the development of its upcoming strategic plan. These initiatives appear to contribute to addressing gaps in monitoring and evaluation that were identified in the current review of evaluations.

Conclusions

- UNICEF-supported programs are highly relevant to the needs of target group members and are supportive of the development plans and priorities of program countries. UNICEF has also had success in developing effective partnerships with government and non-governmental organizations, especially in responding to humanitarian situations. The relevance of UNICEF programming has also been supported by efforts to ensure alignment with key national development plans and policy documents.

- UNICEF has largely been effective in achieving the objectives of its development and humanitarian programs, in securing positive benefits for target group members and in supporting positive changes in national policies and programs. Where UNICEF programs have achieved their objectives, success has often been supported by high-quality program design and by UNICEF's role in influencing national sector policies. On the other hand, when UNICEF-supported programs failed to meet their objectives, it was most often due to weaknesses in project design, often linked to unclear causal relationships and the lack of a results orientation.

- These weaknesses in project design also contributed to mixed results with respect to sustainability. UNICEF achieves fairly positive ratings for its contributions to strengthening the enabling environment for development. However, the results for the likely continuation of benefits and for the development of institutional and community capacity are not as good. A key factor explaining these results is the failure to plan for sustainability and integrate it into program designs.

- UNICEF's performance with respect to gender equality is a serious concern. Coverage of gender equality in the evaluations was not strong and, for those that did address it, the results were weak. A major factor contributing to poor results was the lack of specific objectives regarding gender equality or the lack of a gender perspective in program design and implementation. Given the identification of gender equality as a "foundation strategy" at UNICEF, it is surprising that gender equality is addressed in only two-thirds of the evaluations.

- There was insufficient coverage of environmental sustainability to warrant presentation of the results. It appears that environmental sustainability or the impact of UNICEF-supported programs on their environment is not addressed in most evaluations, although some evaluations of humanitarian action and programs in water, sanitation and hygiene did address the issue. Given increasing emphasis on programs to mitigate the effects of climate change in some UNICEF country programs, coverage of environmental sustainability may be expected to improve in the future.

- The results for the efficiency of programming are mixed and difficult to interpret, as efficiency is not covered systematically in all evaluations. It is likely that factors related specifically to timeliness and implementation systems are only addressed in evaluations when they are problematic and help to explain weaknesses in objectives achievement. The sub-criterion related to cost efficiency, by contrast, was covered more systematically in the evaluations and showed somewhat positive results. However, these results reflect the ways in which programs have tried to reduce unit costs and use resources more efficiently, rather than an analysis of overall program costs, which were not identified in nearly half the evaluations. The factors that enhance cost efficiency include effective monitoring systems that track costs and improve efficiency through lower delivery costs or unit prices, and efforts to identify low-cost approaches to programming. Where the results are less positive, the detracting factor is most often the lack of appropriate cost data.

- The evaluations reflected somewhat positive findings with respect to the use of evaluation at UNICEF, but less so for monitoring and reporting. The use of evaluations is supported by an increasing tendency to prepare evaluation management responses that include action plans. Too few evaluations addressed the use of results-based management systems for those findings to be reported. The lack of clear results frameworks, appropriate indicators and baseline information detracted from UNICEF's effective use of monitoring and evaluation systems. The current development and implementation of the Monitoring Results for Equity System (MoRES) represents a significant effort to address this issue.

- While the sample of evaluations reviewed reasonably reflects the population of UNICEF evaluations between 2009 and 2011, the profile of the evaluations conducted, by country and by type of programming, suggests that UNICEF does not have adequate evaluation coverage of programming in the countries that receive the largest amounts of development and humanitarian funding. Coverage of UNICEF's humanitarian action is noticeably limited.

- UNICEF is investing considerable time and effort in developing systems for results monitoring, which are being implemented at all levels of the organization, and in using the results of global evaluations for strategic planning. These initiatives hold out the promise of strengthened results reporting, which could remove the need for development effectiveness reviews of this type in the future. That may depend on how well the evaluation function is incorporated into the system as a means of verifying UNICEF's contribution and testing the validity of theories of change.

UNICEF has undertaken a number of initiatives in the period following publication of the evaluations reviewed in order to address some of the issues reported here. Examples of the main initiatives include:

- The development and ongoing implementation of new systems for monitoring and reporting results;

- The continued development by the Evaluation Office of the Global Evaluation Report Oversight System (GEROS), coupled with efforts to improve evaluation coverage;

- Changes to operating procedures to reduce procedural bottlenecks;

- The development of the Strategic Priority Action Plan for Gender Equality; and,

- Efforts to use evaluation and research findings to inform and strengthen strategic planning.

While these have not been assessed, documents and interviews at UNICEF suggest that they represent a significant effort to respond to the issues identified. Their effectiveness will doubtless be the subject of future evaluations.

Canada's Relationship with UNICEF

The final section of the report assesses Canada's engagement with UNICEF, including the results of the institutional strategy for that engagement, managed by the Global Issues and Development Branch. This Canada-specific section fulfills evaluation requirements mandated by federal policy to present evidence of the relevance, efficiency and effectiveness of Foreign Affairs, Trade and Development Canada's (DFATD) development expenditures and to provide recommendations regarding future engagement with the institution.

The review of Canada's engagement with UNICEF identified the following strengths:

- UNICEF's efforts are highly relevant to two of Canada's international development priorities: increasing food security and securing the future of children and youth. UNICEF programs in health and education also indirectly contribute to Canada's third development priority of long-term sustainable economic growth.

- UNICEF increasingly engages in partnerships with civil society, national governments, and with other UN agencies, all of which contribute to the effectiveness of its programs.

- Canada is influential in advocating for strengthened humanitarian capacity within UNICEF.

- Canada uses its human and financial resources efficiently in its engagement with UNICEF, a conclusion echoed by both other donors and UNICEF.

The Canada-specific review identified the following areas of opportunity:

- UNICEF demonstrates progress in integrating gender equality into programming, but continues to lack sufficient performance measurement and reporting in this area. UNICEF's contribution to environmental sustainability and governance could not be assessed in this review.

- Progress has been made towards the achievement of Canada's strategic objectives for its engagement with UNICEF. However, DFATD has not developed performance measurement tools to track and assess its performance in achieving these objectives.

- Despite improvements in partnerships, UNICEF has occasionally demonstrated reluctance to engage in the wider agenda of UN reform, an effort that Canada and other donors actively champion.

- In its engagement with UNICEF, Canada has not emphasized the sustainability of programming, an area of weakness highlighted by UNICEF's own evaluations.

- Evaluating humanitarian action remains a challenge, even in country programs with a humanitarian focus.

The Canada-specific review concludes with recommendations for DFATD to consider in its engagement with UNICEF:

- DFATD should continue to emphasize the integration of gender equality in UNICEF programs and should highlight the importance of performance measurement and reporting in this area.

- DFATD should develop a performance measurement framework to assess progress on Canada's strategic objectives for its relationship with UNICEF.

- As Canada and other members of the General Assembly seek mechanisms to harmonize the UN development system, DFATD should continue to work with UNICEF to identify realistic and tangible ways to collaborate with other UN organizations.

- DFATD should encourage UNICEF to improve its sustainability planning. This could involve efforts to strengthen UNICEF's program design and implementation plans.

- DFATD should encourage UNICEF to increase its outcome-level reporting and evaluation of humanitarian assistance.

1.0 Introduction

1.1 Background

This report presents the results of a review of the effectiveness of development and humanitarian programming supported by the United Nations Children's Fund (UNICEF). It was commissioned by the Netherlands Ministry of Foreign Affairs and carried out by a team from Goss Gilroy Inc. of Ottawa, Canada.Footnote 3 In addition, Foreign Affairs, Trade and Development Canada (DFATD) has undertaken a review of Canada's engagement with UNICEF, which is presented in Section 5.0.

The common approach and methodology for reviews of this type were developed under the guidance of the Organisation for Economic Co-operation and Development's Development Assistance Committee (DAC) Network on Development Evaluation (DAC-EVALNET). The review relies on the content of published evaluation reports produced by UNICEF, supplemented with a review of UNICEF corporate documents and consultation with staff at UNICEF headquarters in New York.

The method uses a common set of assessment criteria derived from the DAC's evaluation criteria (Annex 1). It was pilot tested during 2010 using evaluation material from the Asian Development Bank and the World Health Organization. In June 2011, the members of the DAC-EVALNET endorsed the overall approach and methodology as an acceptable means of assessing the development effectiveness of multilateral organizations. The first full reviews using the approved methodology were conducted in 2011/2012. The Canadian International Development Agency (CIDA)Footnote 4 led the reviews of the United Nations Development Programme and the World Food Programme, with support and participation from the Netherlands Ministry of Foreign Affairs.

In 2012/13, another two development effectiveness reviews were carried out. Canada took the lead role in an assessment of the African Development Bank, while the Netherlands Ministry of Foreign Affairs was responsible for the review of UNICEF. The review of UNICEF took place from October 2012 to May 2013. It included three rounds of consultations with UNICEF: two days of meetings at project startup; a presentation and review of preliminary findings in March 2013; and the presentation and discussion of the final report on May 6, 2013. The final report was also presented and discussed at a meeting of interested UNICEF donor agencies, hosted by the Netherlands Permanent Mission to the United Nations in New York City on May 7, 2013.

From its beginnings, the process of developing and implementing the reviews of development effectiveness has been coordinated with the work of the Multilateral Organization Performance Assessment Network (MOPAN). By focusing on development effectiveness and carefully selecting assessment criteria, the reviews seek to avoid duplication or overlap with the MOPAN process.

The intent behind the initiative is for DAC-EVALNET members to conduct development effectiveness reviews of multilateral organizations as distinct assessments, in parallel to the MOPAN reviews. Each review should be planned in consultation with the evaluation department of the multilateral organization concerned. The results should then be of use to the multilateral organizations and their stakeholders.

1.2 Purpose

The purpose of the review is to provide an independent, evidence-based assessment of the humanitarian and development effectiveness of UNICEF operations (hereafter referred to as "programs") for use by all stakeholders.

The current approach to assessing the development effectiveness of multilateral organizations was developed to address a gap in the information available to bilateral development agencies. While MOPAN provides regular reports on the organizational effectiveness of multilateral organizations, it began to examine development effectiveness only in 2012 and has not yet fully addressed the information gap this review is meant to fill. Other options, such as large-scale, joint donor-funded evaluations of a given multilateral organization, take much more time, cost more, and impose a significant management burden on the organization being evaluated before, during and after such evaluations.

The current approach is intended to work in a coordinated way with initiatives such as the DAC-EVALNET/United Nations Evaluation Group Peer Reviews of the evaluation functions of United Nations organizations. It also recognizes that multilateral organizations continue to make improvements and strengthen their reporting on development effectiveness. Ultimately, the approach is intended to be superseded by regular, evidence-based, field-tested reporting on development effectiveness provided by the multilateral organizations themselves.

1.3 Structure of the Report

The report is structured as follows:

- Section 1.0 provides an introduction to the review and a general description of UNICEF as an organization;

- Section 2.0 presents a brief description of the approach and methodology used to carry out the review;

- Section 3.0 details the findings of the review in relation to six main criteria and nineteen sub-criteria of effectiveness in development programming and humanitarian action. Each of its six sub-sections (one per main criterion) begins with a discussion of the level of coverage found for each sub-criterion, which keeps the assessment transparent and gives the reader the context for the findings. These short discussions of coverage are followed by a detailed discussion of the results found in the evaluations, as they apply to each sub-criterion;

- Section 4.0 provides the conclusions of the review. As this is an external review of effectiveness, rather than an evaluation of UNICEF programs, the report does not include recommendations for UNICEF; and,

- Section 5.0 provides an assessment of Canada's engagement with UNICEF. It considers the relevance of UNICEF's objectives to Canada's international development priorities and Canada's performance in achieving its objectives for engagement with UNICEF, and provides conclusions and recommendations to DFATD.

Box 1: Five UNICEF Program Focus Areas

- Young child survival and development

- Basic education and gender equality

- HIV/AIDS and children

- Child protection from violence, exploitation and abuse

- Policy advocacy and partnerships for children's rights

Medium-Term Strategic Plan, 2006 – 2013.

1.4 UNICEF: A Global Organization Focused on Equity for Children

1.4.1 UNICEF's Strategic Direction and Focus on Equity

UNICEF's mission statement reiterates its mandate from the United Nations to "advocate for the protection of children's rights, to help meet their basic needs and to expand their opportunities to reach their full potential."Footnote 5 Since 2006, it has been guided in carrying out this mission by a Medium-Term Strategic Plan,Footnote 6 which originally covered the period from 2006 to 2009 but has been extended twice and is now referred to as the Medium-Term Strategic Plan for 2006-2013.

The Medium-Term Strategic Plan sets out fifteen guiding principles, five program focus areas and two foundation strategies. The guiding principles link UNICEF's programming to normative documents such as the Convention on the Rights of the Child, the Convention on the Elimination of All Forms of Discrimination Against Women and the Millennium Declaration and Millennium Development Goals (MDG). They also commit the organization, inter alia, to a strategy of capacity development, working firmly within the United Nations system, and adapting program strategies to the needs of program countries.

The five program focus areas are a central organizing principle of the Medium-Term Strategic Plan (Table 1). They are intended to "have a decisive and sustained impact on realizing children's rights and achieving the commitments of the Millennium Declaration and Goals."Footnote 7

The Medium-Term Strategic Plan provides a description of the types of activities UNICEF will undertake and the programs it will support in each of its five focus areas (Table 1).

Table 1: UNICEF Activities and Programs in Five Focus Areas of the Medium-Term Strategic Plan

| UNICEF Focus Areas | UNICEF Program Contents |
|---|---|
| 1. Young child survival and development | Support in regular, emergency and transitional situations for essential health, nutrition, water and sanitation programs, and for young child and maternal care at the family, community, service-provider and policy levels. |
| 2. Basic education and gender equality | Focus on improved developmental readiness for school; access, retention and completion, especially for girls; improved education quality; education in emergency situations and continued leadership of the United Nations Girls' Education Initiative (UNGEI). |
| 3. HIV/AIDS and children | Emphasis on increased care and services for children orphaned and made vulnerable by HIV/AIDS, on promoting expanded access to treatment for children and women and on preventing infections among children and adolescents; continued strong participation in the Joint United Nations Programme on HIV/AIDS (UNAIDS). |
| 4. Child protection from violence, exploitation and abuse | Strengthening of country environments, capacities and responses to prevent and protect children from violence, exploitation, abuse, neglect and the effects of conflict. |
| 5. Policy advocacy and partnerships for children's rights | Putting children at the centre of policy, legislative and budgetary provisions by: generating high-quality, gender-disaggregated data and analysis; using these for advocacy in the best interests of children; supporting national emergency preparedness capacities; leveraging resources through partnerships for investing in children; and fostering children's and young people's participation as partners in development. |

In addition to the five focus areas, the Medium-Term Strategic Plan reiterates UNICEF's intention to build its capacities to respond to emergencies in a timely and effective manner. It notes that responding to humanitarian situations remains an essential element of the work of UNICEF.

Finally, the Medium-Term Strategic Plan includes a major commitment to the pursuit of equity by focusing on gender equality and the rights of the most vulnerable. It identifies a human rights-based approach to programming and the promotion of gender equality as "foundation strategies" for UNICEF.

Applying a human rights-based approach and promoting gender equality, as "foundation strategies" for UNICEF work will improve and help to sustain the results of development efforts to reduce poverty and reach the Millennium Development Goals by directing attention, long-term commitment, resources and assistance from all sources to the poorest, most vulnerable, excluded, discriminated and marginalized groups.Footnote 9

The principles, focus areas and foundation strategies outlined in UNICEF's Medium-Term Strategic Plan have been ratified by two Mid-Term Review Reports (2008 and 2010).Footnote 10

The most recent annual report of the Executive Director of UNICEF placed special emphasis on the organization's "refocus on equity":

The refocus on equity holds abundant promise for children, especially through faster and more economical achievement of the Millennium Development Goals. UNICEF emphasized the implementation of the refocus during 2011 at the local and national levels in partnership with governments, civil society organizations and United Nations partners. Measuring results for the most disadvantaged populations, particularly at the local level, has proven critical to accelerate and sustain progress in reducing disparities.Footnote 11

Thus, UNICEF's strategy for 2006-2013 has placed considerable emphasis on: addressing inequities faced by vulnerable children through programming in its five program focus areas; responding effectively to humanitarian situations; and pursuing foundation strategies of a human rights-based approach to programming and promoting gender equality.

1.4.2 UNICEF Operations and Program Expenditures

UNICEF is active in over 190 countries and territories through its programs and the work of national committees. In 2011, it had programs of cooperation in 151 countries, areas and territories: forty-five in sub-Saharan Africa; thirty-five in Latin America and the Caribbean; thirty-five in Asia; sixteen in the Middle East and North Africa; and twenty in Central and Eastern Europe and the Commonwealth of Independent States.Footnote 12

UNICEF's spending on program assistance in 2011 totalled US$3,472 million. Over half of that amount went to the Young Child Survival and Development focus area. In addition, 57% of total program assistance in 2011 was directed to sub-Saharan Africa, which is home to the majority of the world's Least Developed Countries.Footnote 13

Figure 1 shows the portion of UNICEF direct program expenditures in each focus area and in humanitarian action from 2009 to 2011.

Figure 1: UNICEF Expenditures, by Focus Area and Humanitarian Action, 2009-2011 Footnote 14

Figure 1 Text Alternative

1. Young child survival and development 33%

2. Basic education and gender equality 16%

3. HIV/AIDS and children 5%

4. Child Protection 7%

5. Policy advocacy and partnership 10%

6. Other 1%

7. Humanitarian Emergency 27%

Expenditures in the five focus areas are development expenditures funded from a) Regular Resources and b) Other Resources – Regular, following UNICEF's resource accounting categories. Expenditures on humanitarian action are those financed from c) Other Resources – Emergency. The distribution of expenditures by type of funding from 2009 to 2011 is shown in Figure 2.

Figure 2: UNICEF Expenditures, by Type, 2009 - 2011 Footnote 15

Figure 2 Text Alternative

Regular Resources 24%

Other Resources - Regular 49%

Other Resources - Emergency 27%

1.5 Program Evaluation at UNICEF

One of the conditions for undertaking a development effectiveness review is the availability of enough evaluation reports of reasonable quality to provide an illustrative sample covering an agency's activities and programs (Annex 2). In order to satisfy that condition, the review team examined program evaluation policies and practices and the quality of evaluation reports produced and published by UNICEF. This short survey is by no means exhaustive and should not be read as an overall assessment of the function. It is only intended to establish the feasibility of conducting a development effectiveness review of UNICEF based on its own published evaluations.

1.5.1 Evaluation Policies and Practices at UNICEF

UNICEF's current evaluation policyFootnote 16 was prepared, at least in part, in response to the report of a peer review panel of international evaluation experts produced under the auspices of the DAC-EVALNET and the United Nations Evaluation Group in 2006. That report was just the second in the ongoing series of peer reviews of evaluation functions in the multilateral system. In its judgment statement, the report recognized the strength of UNICEF's Evaluation Office and the ongoing challenge of ensuring evaluation quality and adequate coverage in a decentralized system of program evaluation:

Evaluation at UNICEF is highly useful for learning and decision-making purposes and, to a lesser extent, for accountability in achieving results.

UNICEF's central Evaluation Office is considered to be strong, independent and credible. Its leadership by respected professional evaluators is a major strength. The EO has played an important leadership role in UN harmonization through the UN Evaluation Group.

The Peer Review Panel considers that a decentralized system of evaluation is well-suited to the operational nature of UNICEF. However, there are critical gaps in quality and resources at the regional and country levels that weaken the usefulness of the evaluation function as a management tool.Footnote 17

The 2008 evaluation policy for UNICEF retained the decentralized system, in which responsibility for different types of evaluations rests at different levels of the organization. An overview of evaluation roles and responsibilities by organizational location at UNICEF, as described in the policy, is presented below.

Organizational Responsibilities for Program Evaluation at UNICEF Footnote 18

| Organizational Location | Evaluation Roles and Responsibilities | Types of Evaluations Commissioned |
|---|---|---|
| Country Offices/Country Representatives | Assign resources to evaluation; communicate with partners; prepare the Integrated Monitoring and Evaluation Plan for the country office; provide quality assurance for meeting standards established by the Evaluation Office; ensure evaluation findings inform the decision-making process; follow up and report on the status of evaluation recommendations | Local or project evaluations; program evaluations of elements of the country office program |
| Regional Offices/Regional Directors | Focus on oversight and strengthening the capacity of country office evaluation functions by: coordinating capacity building with the Evaluation Office; preparing regional evaluation plans; providing quality assurance and technical assistance to evaluations of country programs | Country program evaluations; multi-country thematic evaluations; regional contributions to global evaluations; real-time evaluations of emergency operations |
| Directors at Headquarters | Prioritize evaluations of global policies and initiatives in their program areas; ensure funding for programs funded by other resources | Global policies; global program initiatives |
| Evaluation Office | Coordinates the evaluation function at UNICEF; collaborates with UNICEF partners in multi-party evaluations; promotes evaluation capacity development in developing countries; provides leadership in developing approaches and methodologies for policy, strategic, thematic, program, project and institutional evaluations; maintains the institutional database of evaluations and promotes its use; conducts periodic meta-evaluations of the quality and use of evaluations at UNICEF | Independent global evaluations; evaluations of global policies and program initiatives, as requested by Directors |
| Evaluation Committee | Reviews UNICEF evaluations with relevance at the global governance level; examines annual follow-up reports on the implementation of recommendations; reviews the work program of the Evaluation Office | |

UNICEF reports annually to the Executive Board on the performance of the evaluation function. This report encompasses both the number and types of evaluations produced and progress in monitoring and improving the quality of the evaluations produced.

For the past three years, the quality of evaluations produced by UNICEF country and regional offices has been assessed independently by a contracted external agency applying a consistent methodology. The results of this Global Evaluation Report Oversight System (GEROS) review are published annually and incorporated into the annual report on the evaluation function. Table 2 summarizes the evaluation performance indicators reported in the 2012 report.

Table 2: Performance Indicators for the Evaluation Function at UNICEF 2011

| Evaluation Performance Indicator | Results for 2011 |
|---|---|
| 1. Number of evaluations managed and submitted to the Global Database | |
| 2. Topical distribution | |
| 3. Type of evaluations conducted | |
| 4. Quality of UNICEF evaluations | GEROS rating for evaluations conducted in 2010 and assessed in 2011 |
| 5. Use of evaluation, including management responses | |
| 6. Corporate-level evaluations | |

The annual GEROS report represents an important effort by the Evaluation Office to monitor and influence the quality of evaluations produced by regional and country offices. This effort has been further strengthened by a dashboard summarizing information on the decentralized evaluation function (at regional and country office levels), which is part of UNICEF's Virtual Integrated System of Information (VISION), launched in January 2012.

The decentralized evaluation function dashboard is updated quarterly and presents, for each UNICEF regional office, information on: evaluation report submission rates; the quality of evaluation reports as assessed by GEROS in the previous year; management responses uploaded to the Global Tracking System; and implementation rates for management responses.

Notably, the VISION dashboard for decentralized evaluation has no metric for measuring the coverage of country office programs. The review team found (Section 2.0) considerable variation in the number of evaluations carried out by country offices over the three years from 2009 to 2011. Nonetheless, UNICEF's Evaluation Office is clearly engaged in ongoing efforts to improve the quality of decentralized evaluations.

Finally, it is worth pointing out that recent global evaluations managed by the Evaluation Office have been used by the Programme Division at UNICEF to inform the planning process for the 2014 to 2017 Medium-Term Strategic Plan.Footnote 20

1.5.2 Quality of Evaluation Reporting at UNICEF

Data on evaluation quality at UNICEF are available to the review from two sources: UNICEF's independent GEROS reports and the results of the review team's own quality assessment of the evaluations in the sample.

The latest GEROS report was produced in 2012 and covers 87 evaluation reports produced by UNICEF in 2011. It reports a three-year trend of improvement, with 42% of reports meeting UNICEF evaluation standards in 2011, compared with 40% in 2010 and 36% in 2009.Footnote 21

The review took the GEROS ratings into account when developing the evaluation sample, as noted in Annex 3. GEROS uses four evaluation report quality ratings: "not confident to act"; "almost confident to act"; "confident to act"; and "very confident to act". In developing a purposive sample of the evaluation reports produced from 2009 to 2011, the review team included only evaluations rated "almost confident to act" or better. The review team assessed a total of 70 evaluations for quality and rejected only four for quality reasons. Four others were excluded from the review for other reasons (Section 2.1 and Annex 3).

1.6 Monitoring and Reporting Results

1.6.1 Elements of UNICEF's Results Monitoring and Reporting Systems

In recent years, UNICEF has invested considerable effort in modifying and strengthening its systems and processes for monitoring and reporting on the results of programs, and for allocating resources to specific results. Currently, some of the most notable elements of the system include the Monitoring Results for Equity Systems (MoRES), the Virtual Integrated System of Information (VISION) and the Executive Director's annual reports on progress and achievement against the Medium-Term Strategic Plan.

Monitoring Results for Equity Systems

MoRES is one of the core elements of UNICEF's "refocus on equity", which began in 2010 as an initiative of the Executive Director. MoRES is UNICEF's conceptual framework for planning, programming, implementation and management of results.Footnote 22 It is intended to meet the need for intermediate process and outcome measures between routine monitoring of program and project inputs and outputs, on the one hand, and monitoring of higher-level outcomes, which is done using internationally agreed indicators every three to five years, on the other.

The conceptual model for MoRES includes four levels of country office programmatic action, each linked to different planning, monitoring and evaluation activities. Level three monitoring, the primary focus of MoRES, addresses the questions: "Are we going in the right direction? Are bottlenecks and barriers to equity changing?"Footnote 23

To undertake level three monitoring, country offices must first identify country-specific indicators relating to ten determinants of the bottlenecks that hinder equity for children. These determinants are grouped under four major headings: the supply of services, the demand for services, the quality of services, and the enabling environment.

In 2012, the Humanitarian Performance Monitoring System, UNICEF's system of indicators for results in humanitarian action, was incorporated into MoRES to allow for a single, integrated system of performance monitoring.

It is worth noting that the conceptual model of MoRES includes the evaluation function as the means of verifying the final outcomes and impacts of programming at country level.

The global two-year management plan for MoRES was adopted by UNICEF in January 2012, and the level three monitoring module was implemented by 27 country offices between March and June of the same year.Footnote 24 MoRES was mainstreamed into the annual review process in the second half of 2012. As an important element of the refocus on equity, MoRES is to be the subject of a formative evaluation managed by the Evaluation Office in 2013.

Virtual Integrated System of Information

The VISION system, fully launched in January 2012, allows UNICEF offices at all levels to develop and enter data on different types and levels of results. Perhaps most importantly, it links budgeting data and key results, so that country offices can now enter their budget information under key UNICEF results areas rather than under program expenditure activities.

A key component of VISION is its Results Assessment Module, which can be used to extract information on planned program results and compare them with reported achievements. During 2011, MoRES level three monitoring elements were integrated into VISION.

Annual Report of the Executive Director of UNICEF: progress and achievement against the medium term plan

The Executive Director of UNICEF reports annually on the progress made and achievements secured against the key results identified in the Medium-Term Strategic Plan. This report combines information on the global trends of indicators relating to child-specific Millennium Development Goals (and other global, regional and country-specific indicators of child welfare and disparity) with examples of UNICEF's contribution to results in each of the five focus areas.

The annual report also addresses developments in the implementation of program principles and strategies, and internal measures of program performance. In 2012, the report highlighted developments in evaluation, country office efficiency, shared services with other UN agencies, and new initiatives in recruitment and human resource management.

The annual report is supported by a large data companion report which tracks a significant number of key performance indicators across the five focus areas and humanitarian action.

1.6.2 Strengths and Weaknesses of the Results Monitoring and Reporting System

The main strength of UNICEF's results monitoring and reporting system as it currently stands is its multi-layered nature and its ability to link standardized categories of results to resource allocations and budgets through the VISION system. With MoRES level three monitoring integrated into VISION, there should be strong linkages from development and humanitarian program inputs to the intermediate level of results.

What is currently lacking from the annual report of the Executive Director and its data companion is a clear explanation of how UNICEF's activities and its support to programs contribute to the higher-level outcomes and impacts being reported. The contribution is asserted and examples are given, but it is not clear how the link between UNICEF's contribution and the outcomes is established. At the same time, MoRES, by focusing on determinants of inequality for children and addressing indicators of intermediate results for UNICEF-supported programs, goes some way toward addressing the issue of UNICEF's contribution.

The need for strengthened monitoring is recognized in Programme Division's recent work on the challenges of the upcoming Medium-Term Strategic Plan.Footnote 25

Monitoring and demonstrating tangible results is also central for UNICEFs results and performance-based management and reporting to donors, particularly in the light of increasing competition for humanitarian and development funding. The expansion of the assessment, monitoring, analysis and evaluation functions across country offices and Government partners will require additional investments in staff, training and technical assistance. The next Medium-Term Strategic Plan should emphasize monitoring as a key program function and highlight the investments needed to improve monitoring functions on the ground.

One development that may strengthen the link between higher-level outcomes and UNICEF's contribution is the explicit emphasis in MoRES on the role evaluation can play in verifying the outcomes and impacts of country-level programming. This will depend, of course, on the development of credible theories of change for country programs and their elements. To some extent, the MoRES model addresses this question through its identification of determinants of equity. However, this is not yet made explicit in reporting on progress against the Medium-Term Strategic Plan.

In summary, UNICEF is investing considerable time and effort in developing new systems for results monitoring, which are being implemented at all levels of the organization. They hold out the promise of strengthened results reporting, which could remove the need for development effectiveness reviews of this type in the future. That will depend on how well the evaluation function is incorporated into the system as a means of verifying UNICEF's contribution and testing the validity of theories of change.

2.0 Methodology

This section describes the methodology used for the review, including the identification of the population and the sampling process, the review criteria, the review and analysis processes and other data collection used. It concludes with a discussion of the limitations of the review.

2.1 Evaluation Population and Sample

A population of UNICEF evaluations was identified from two sources:

- Evaluation Office website; and,

- Three oversight reports from the assessment of evaluations in UNICEF's Global Evaluation Report Oversight System (GEROS), covering evaluations conducted from 2009 to the end of 2011.

These sources identified a population of 341 evaluations. In consultation with UNICEF's Evaluation Office, it was decided that the review should focus on evaluations conducted since 2008: UNICEF had adopted a new evaluation policy in that year, and evaluations conducted after 2008 were expected to reflect it. It was also decided that the sample would exclude evaluations rated by GEROS as being of poor quality ("not confident to act"). Evaluations that focused on global programming were excluded from the population for the quantitative review but were included in a qualitative review by senior team members. In addition, some evaluations in the population were duplicates, were not considered to be evaluations, or did not focus on UNICEF programming; these were dropped. The result was a population of 197 evaluations from which to draw the sample.

A sample of 70 evaluations was drawn from the population, with the intention of obtaining 60 evaluations that would pass the quality review and be available for rating. The sample was initially drawn at random, stratified by Medium-Term Strategic Plan theme area. It was then adjusted manually to ensure adequate coverage of two other dimensions: region and type of country (least-developed or middle-income). The resulting sample was therefore no longer random, but a purposive sample that is illustrative of UNICEF's programming across a number of dimensions. Eight evaluations were eliminated from the initial sample: four because they did not pass the quality review, two because they did not cover UNICEF programming or address program effectiveness, and two because they covered activities already covered in other evaluations. This left a sample of 62 evaluations that were rated by the team (Annex 2).

The key characteristics of the population and the sample include:

- Evaluations in the population and sample were evenly spread across the three years 2009 – 2011;

- Evaluations in the population and sample were distributed across the regions in which UNICEF programs and between middle-income and least-developed countries. However, the sample contained slightly more evaluations of programming in least-developed countries in West and Central Africa than were represented in the population;

- Evaluations in the population and sample covered all priority areas of UNICEF programming, including all themes in the Medium-Term Strategic Plan and humanitarian action. However, not all types of UNICEF programming are covered equally by evaluations in the population. Relatively few evaluations in the population covered humanitarian action, even though this programming accounted for just over one-quarter of UNICEF expenditures in 2009 - 2011. This is also reflected in the fact that very little of the humanitarian action in the countries receiving the largest amounts of humanitarian funding in 2009 - 2011 was subject to evaluation. In addition, five of the humanitarian evaluations included in the sample covered programming in response to the same humanitarian crisis, the 2004 Indian Ocean tsunami;

- The evaluation population and sample also under-represented evaluations of humanitarian actions managed by UNICEF at the Country Office level (Level One), which are quite numerous.Footnote 26 All but one of the evaluations of humanitarian action included in the sample covered UNICEF Level Three emergencies. Thus, the findings on humanitarian action relate almost entirely to emergencies managed by UNICEF at the global or regional level;

- Only a small percentage of UNICEF's evaluations cover programming in the countries receiving the largest amounts of development or humanitarian funding in 2007 - 2011. It is recognized that the evaluations cover funding periods spanning 2000 to 2011, and the profile of spending in the years prior to 2007 may have been different. However, the under-representation of the most heavily funded countries in the population does suggest that UNICEF is not achieving strong evaluation coverage of the programming that receives its largest amounts of development or humanitarian funding. The sample attempted to compensate for this somewhat by including more evaluations from these countries than the distribution of the population would predict.Footnote 27

See Annex 3 for details of the comparison of the evaluation population and sample.

It was not possible to identify the value of UNICEF programming that was covered by the evaluation sample. While the review process required the raters to identify the overall costs of the programs, this information was found in only 34 evaluations and the nature of the information presented was inconsistent across evaluations.Footnote 28

Apart from the under-representation of the countries receiving the largest amounts of funding and the incomplete coverage of humanitarian action, the evaluations in the sample are, in all other respects, illustrative of UNICEF's global programming.

2.2 Criteria

The methodology does not rely on a particular definition of development and humanitarian effectiveness. As agreed with the Management Group and the Task Team that were created by the DAC-EVALNET to develop the methodology of these reviews, the methodology focuses on some essential characteristics of effective multilateral organization programming, derived from the DAC evaluation criteria:

- Programming activities and outputs would be relevant to the needs of the target group and its members;

- The programming would contribute to the achievement of development objectives and expected development results at the national and local level in developing countries (including positive impacts for target group members);

- The benefits experienced by target group members and the development (and humanitarian) results achieved would be sustainable in the future;

- The programming would be delivered in a cost-efficient manner;

- The programming would be inclusive in that it would support gender equality and would be environmentally sustainable (thereby not compromising the development prospects in the future); and,

- The programming would enable effective development by allowing participating and supporting organizations to learn from experience and to use performance management and accountability tools, such as evaluation and monitoring, to improve effectiveness over time.

Box 2: Assessment Criteria

- Relevance of Interventions

- The Achievement of Humanitarian and Development Objectives and Expected Results

- Cross Cutting Themes (Environmental Sustainability and Gender Equality)

- Sustainability of Results/Benefits

- Efficiency

- Using Evaluation and Monitoring to Improve Humanitarian and Development Effectiveness

The review methodology, therefore, involves a systematic and structured review of the findings of UNICEF evaluations, as they relate to six main criteria (Box 2) and 19 sub-criteria that are considered to be essential elements of effective development and humanitarian programming (Annex 1).

2.3 Review Process and Data Analysis

Each evaluation was reviewed by one member of a small review team that included three reviewers and two senior members (including the team leader).Footnote 29 Each team member reviewed a set of evaluations. The reviewer's first task was to assess the quality of the evaluation report, to ensure that it was of sufficiently high quality to provide reliable information on development and humanitarian effectiveness. This was done using a quality scoring grid (Annex 3). If the evaluation met the minimum required score, the reviewer went on to rate each sub-criterion, based on information in the evaluation and a standard review grid (Annex 4). Reviewers also provided evidence from the evaluations to substantiate their ratings.

Every effort was made to ensure consistency in the ratings by team members. The reviewers were trained by the senior team members. Two workshops were held at which all team members reviewed and compared their ratings for the same three evaluations, and a mid-term workshop was held to address any issues faced by the reviewers. Following completion of the reviews and the documentation of the qualitative evidence supporting the ratings, the team leader reviewed all ratings to ensure that sufficient evidence had been provided and that it was consistent with the rating. Senior team members then reviewed the qualitative evidence for each sub-criterion to identify factors contributing to, or detracting from, the achievement of the sub-criteria.

2.4 Other Data Collection

After the evaluations in the sample had been rated, further information about UNICEF's effectiveness was gleaned from a qualitative review of the global evaluations conducted in the 2009 - 2011 period. These were excluded from the rated sample to avoid double-counting results: some global evaluations drew evidence from other evaluations, so there was a risk that evaluative information on the same program would be counted twice. Reviewing the global evaluations separately also gave them a higher profile in the analysis of the findings for the six criteria.

The review of evaluation reports was also supplemented by a review of UNICEF corporate documents and interviews with UNICEF staff. These were done to contextualize the results of the review. (A list of the global evaluations and documents consulted is provided in Annex 5.)

2.5 Limitations

As with any meta-synthesis, there are methodological challenges that limit the findings. For this review, the limitations include sampling bias, the challenge of assessing overall programming effectiveness when important variations in programming exist (for example, evaluations covering multiple programming components or only a specific theme or project as part of a program area) and the retrospective nature of a meta-synthesis.

Sampling Bias

The sample selected for this review is not a random sample. As a purposive sample, it is nonetheless illustrative of UNICEF-supported programming. However, caution must be exercised in generalizing from the findings of this review to all UNICEF programming. In addition, although UNICEF's evaluations cover a range of countries and each Medium-Term Strategic Plan focus area, there are fewer evaluations of programming in the countries receiving the largest amounts of UNICEF funding, and of its humanitarian action, than would be expected given their share of overall UNICEF funding. However, the review did attempt to compensate for this by over-sampling from countries with the largest amounts of funding and those with humanitarian crises. As already noted, neither the population nor the sample included evaluations of small (Level One) humanitarian actions at UNICEF.

There was generally adequate coverage of the criteria. Seventeen of the nineteen sub-criteria used to assess development and humanitarian effectiveness are sufficiently well covered in the evaluations included in this report. Two received a weak coverage rating and their results are not reflected in this report.

Variations in Programming

The review was not able to report systematically on the effectiveness of UNICEF's programming by focus area or by type of country. There were not enough evaluations in each focus area or type of country included in the meta-synthesis to allow them to be analyzed separately. In addition, some evaluations cover multiple types of programming in the same evaluation. This means that it is not possible to draw conclusions by type of programming or type of country. Although these were not analyzed separately, where qualitative observations can be made about the focus area and/or the country type, they are reflected in the report. However, these observations should be treated with caution, as they can only be illustrative. Where appropriate, the quantitative findings for these analyses are reported in Annex 6.

Retrospective Nature of Meta-synthesis

Evaluations are, by definition, retrospective, and a meta-synthesis is even more so, as it is based on a body of evaluations that address policies and programming implemented over an earlier period. UNICEF's evaluations published in 2009 - 2011 covered years beginning in 2000 and running to 2011.Footnote 30 UNICEF's policies, strategies and approaches to programming have changed over these years, but these changes will not be reflected in all the evaluations. For this reason, some findings may be somewhat dated. To the extent possible, the review addressed this through observations gleaned from recent interviews with UNICEF staff and a review of UNICEF documents.

3.0 Findings on UNICEF's Development Effectiveness

This section presents the results of the review as they relate to the six main criteria and their associated sub-criteria. For each criterion, the report first presents the extent to which each sub-criterion was addressed in the evaluation reports (coverage). It then presents the results (findings), including both the quantitative findings for each sub-criterion and the results of the qualitative analysis of the factors contributing to, or detracting from, the achievement of the sub-criteria. This section also includes evidence from global evaluations that were not included in the quantitative review.

In reporting on the factors, the report makes use of the terms "most", "many", "some" and "few" to describe the frequency with which an observation was noted, as a percentage of the number of evaluations for which the sub-criterion was covered (Box 3). In addition, for the most part, the order in which the factors are presented reflects the frequency with which they were mentioned.

Box 3: Frequency of Observations

Most = over three-quarters of the evaluations for which the sub-criterion was covered

Many = between half and three-quarters

Some = between one-fifth and half

Few = less than one-fifth